Apr 11, 2025

Aiven for PostgreSQL® Performance Benchmarks Across Cloud Offerings

Aiven for PostgreSQL® - State of the Union 04/2025 - Performance benchmark comparison on GCP, AWS, OCI, and Azure

Dirk Krautschick

Senior Solution Architect with Aiven

Aiven offers a seamless multi-service, cross-cloud experience. When you optimize your infrastructure, understanding how service performance varies across clouds can help differentiate your business. This post details how PostgreSQL performs on each of our cloud offerings: AWS, GCP, OCI, and Azure.

We're committed to giving developers full visibility into PostgreSQL performance. That's why, from now on, we will publish detailed benchmarks comparing PostgreSQL instances across major cloud providers. We've ensured fair comparisons by using similar configurations and varying database sizes. By sharing these results, we aim to give you the data you need to make confident decisions about your deployments and optimize for performance. Below are the plan sizes that we used throughout this exercise.

Across AWS, OCI, Azure, and GCP:

| Plan | CPUs | RAM (GB) | Storage (GB) |
|---|---|---|---|
| Startup-4 | 1 / 2 | 4 | 80 |
| Startup-16 | 2 / 4 / 6 | 14 / 15 / 16 | 350 |
| Startup-32 | 4 / 8 | 28 / 31 / 32 | 700 |
| Startup-64 | 8 / 16 | 61 / 64 | 1000 |

Methodology

To compare performance, we measured transactions per second (TPS) for each database while scaling the nodes up vertically through four selected Aiven plan sizes. TPS is a well-established, industry-standard metric in database benchmarking.

In this first iteration, we generated the benchmark load with pgbench, the benchmarking tool included with PostgreSQL. pgbench always ran on a VM (e.g. an EC2 instance for AWS) that represents an application client in the same cloud, region, and availability zone as the database.

The VM instances were configured with 4 cores and 16 GB RAM running Ubuntu 22.04. The only requirement is the installation of PostgreSQL binaries to have pgbench available.

To minimize the effects of variable latency on our benchmark, we used a comparable central Europe region (basically Frankfurt) for each provider, each PostgreSQL instance and the client VM:

- AWS: eu-central-1

- GCP: europe-west3

- Azure: germany-westcentral

- OCI: eu-frankfurt-1

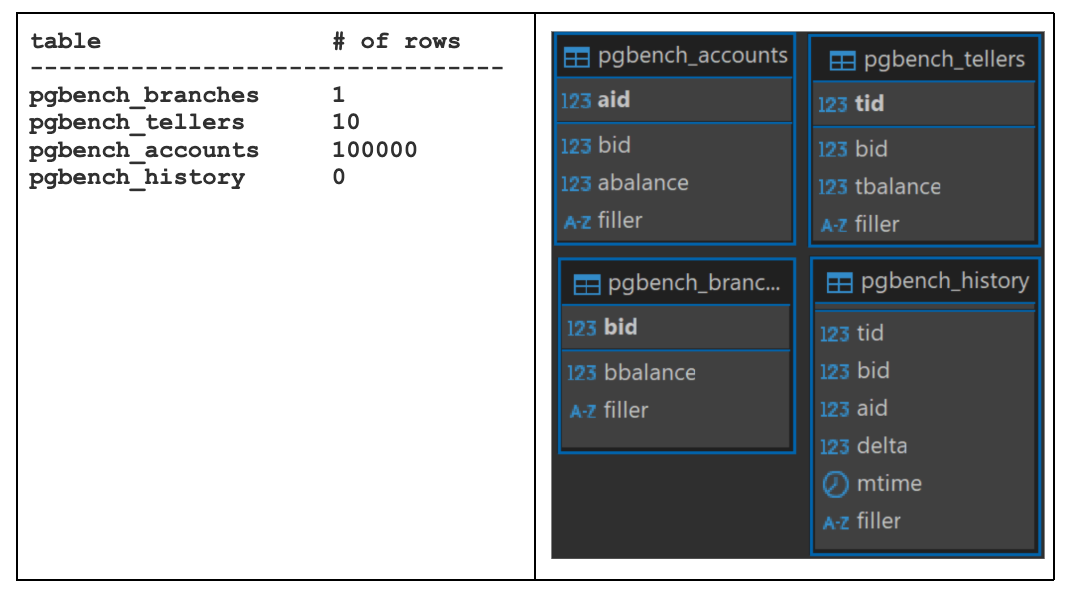

pgbench runs a TPC-B-like OLTP workload in which each transaction executes five SELECT, UPDATE, and INSERT statements across four tables. The number of rows depends on the scale factor described below.

Generating the workload

To prepare the workload, pgbench can generate random data in an initialization phase based on a defined scale factor. The number of rows in the tables grows in proportion to that factor, which makes it possible to create databases of several sizes.

pgbench -i -s $SCALE "postgres://<USER>:<PASSWORD>@<HOST>:<PORT>/<DATABASE>"

The -s/--scale parameter was adjusted for each plan so that the database size was roughly the same as the RAM size of the related plan. The values used were 500, 1500, 2500, and 4500.

The time needed to generate this data could serve as an additional performance metric in future iterations. As a rule of thumb, creating the data for Startup-64 plans (around 65 GB) usually took 8 to 16 minutes in these tests.
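To make the effect of the scale factor concrete, here is a minimal sketch. The per-unit row counts are the ones pgbench documents for its built-in tables. The size estimate is only an assumption: a linear extrapolation from the single data point quoted above (scale 4500, around 65 GB), so real sizes will differ. The function names are hypothetical.

```python
# Rows that `pgbench -i` creates per unit of scale factor (per the pgbench docs).
ROWS_PER_SCALE = {
    "pgbench_branches": 1,
    "pgbench_tellers": 10,
    "pgbench_accounts": 100_000,
}

# Assumption: linear extrapolation from the one figure quoted in the post
# (scale 4500 produced roughly 65 GB). Actual sizes vary by version and settings.
GB_PER_SCALE_UNIT = 65 / 4500


def rows_for_scale(scale: int) -> dict[str, int]:
    """Return the initial row count of each pgbench table for a scale factor."""
    rows = {table: per_unit * scale for table, per_unit in ROWS_PER_SCALE.items()}
    rows["pgbench_history"] = 0  # the history table starts empty and grows during runs
    return rows


def estimated_size_gb(scale: int) -> float:
    """Very rough database size estimate in GB for a scale factor."""
    return scale * GB_PER_SCALE_UNIT


if __name__ == "__main__":
    for scale in (500, 1500, 2500, 4500):
        accounts = rows_for_scale(scale)["pgbench_accounts"]
        print(f"scale={scale}: {accounts:,} account rows, ~{estimated_size_gb(scale):.0f} GB")
```

Because the estimate is linear, treat it as an order-of-magnitude check rather than a sizing tool.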

Running the benchmark

To create an application-like scenario, pgbench can run several parallel client sessions and threads. For these benchmarks, we kept the thread count ($THREADS) at 2 and set the number of client sessions ($CLIENT_CNT) to match the number in the plan name: 4, 16, 32, and 64, respectively.

To obtain realistic results with as little noise as possible, we ran the benchmark three times for 5 minutes and three times for 60 minutes, and took the average of all runs as the final result.

pgbench -c $CLIENT_CNT -j $THREADS -P 60 -r -T $BENCH_TIME "postgres://<USER>:<PASSWORD>@<HOST>:<PORT>/<DATABASE>"
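The run schedule and the averaging step described above could be scripted roughly as follows. This is a sketch, not the tooling used for the post: the helper names are hypothetical, and the parser only assumes that pgbench ends its report with a summary line starting with `tps = `. The sample values in the usage part are made up to exercise the parser and are not measurements.

```python
import re
import shlex
from statistics import mean

THREADS = 2                # -j, kept fixed as described above
DURATIONS_S = (300, 3600)  # 5-minute and 60-minute runs
REPEATS = 3                # each duration is repeated three times
PLAN_CLIENTS = {"startup-4": 4, "startup-16": 16, "startup-32": 32, "startup-64": 64}

# Matches only the final summary line, not the "progress:" lines printed by -P.
TPS_RE = re.compile(r"^tps = ([0-9.]+)", re.MULTILINE)


def pgbench_commands(plan: str, uri: str) -> list[str]:
    """Build the six pgbench command lines (3 x 5 min, 3 x 60 min) for one plan."""
    clients = PLAN_CLIENTS[plan]
    return [
        shlex.join(["pgbench", "-c", str(clients), "-j", str(THREADS),
                    "-P", "60", "-r", "-T", str(duration), uri])
        for duration in DURATIONS_S
        for _ in range(REPEATS)
    ]


def parse_tps(pgbench_output: str) -> float:
    """Extract the first tps value from the summary that pgbench prints at the end."""
    match = TPS_RE.search(pgbench_output)
    if match is None:
        raise ValueError("no 'tps = ...' line found in pgbench output")
    return float(match.group(1))


def final_result(outputs: list[str]) -> float:
    """Average the tps of all runs to get one figure per plan."""
    return mean(parse_tps(out) for out in outputs)


if __name__ == "__main__":
    uri = "postgres://<USER>:<PASSWORD>@<HOST>:<PORT>/<DATABASE>"
    for command in pgbench_commands("startup-16", uri):
        print(command)
    # Made-up summary lines, only to show the averaging step.
    samples = ["tps = 1000.0 (without initial connection time)",
               "tps = 1100.0 (without initial connection time)"]
    print(final_result(samples))
```

Averaging short and long runs together gives each run equal weight regardless of its duration. That matches the description above, but it is a design choice worth keeping in mind when comparing with other benchmarks.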

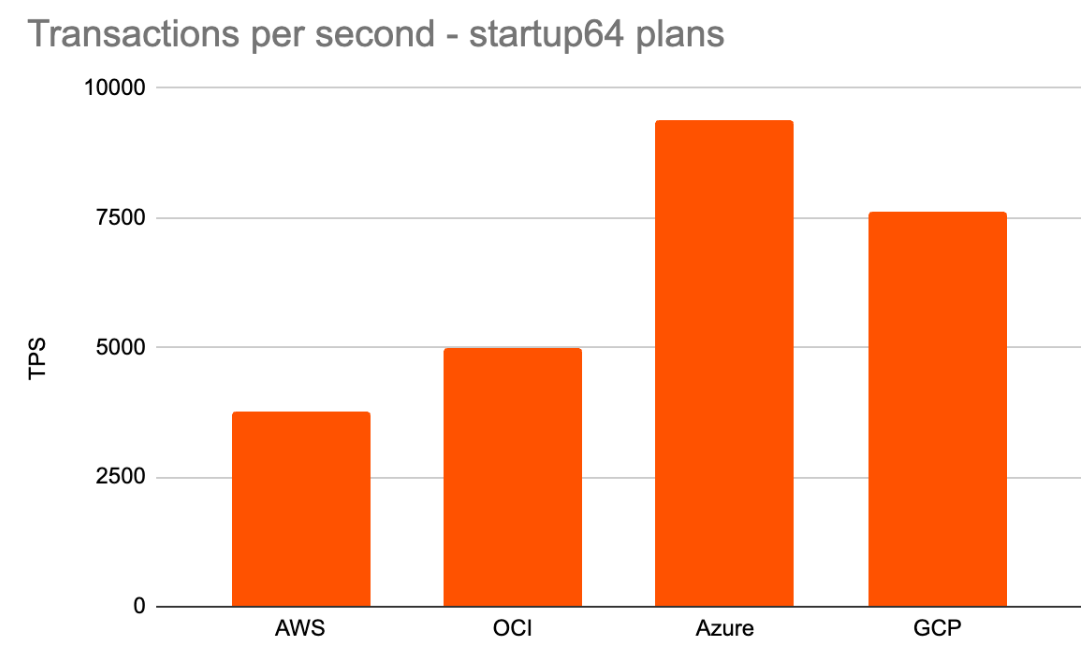

Aiven results

We start with the results of this benchmark run for the four selected plan sizes of Aiven for PostgreSQL on the four hyperscalers.

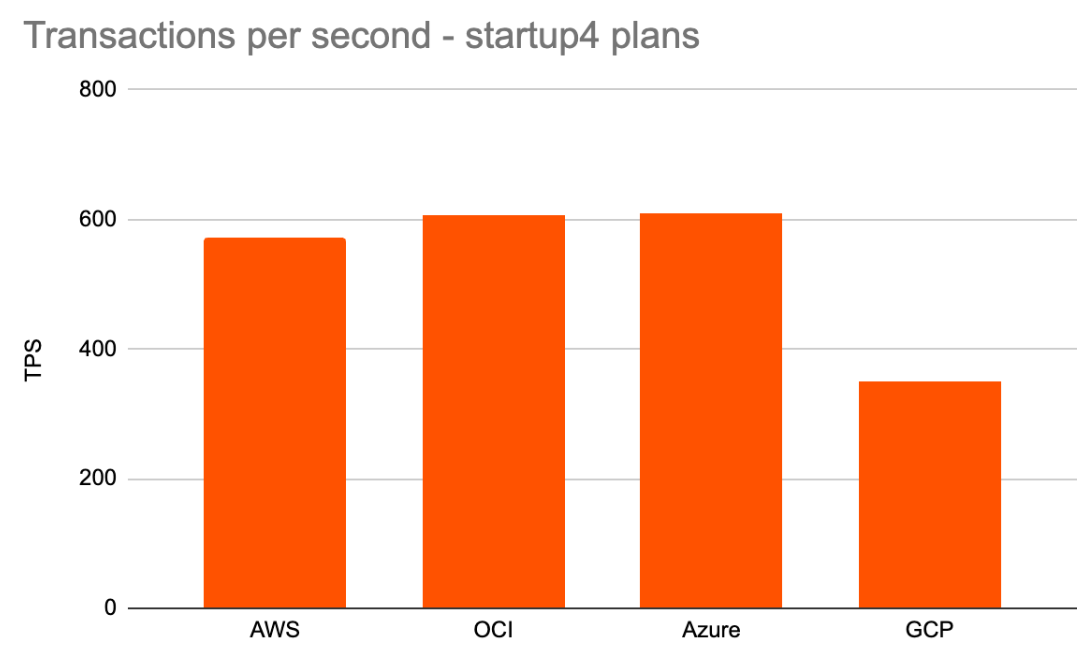

Aiven Startup-4 Plans

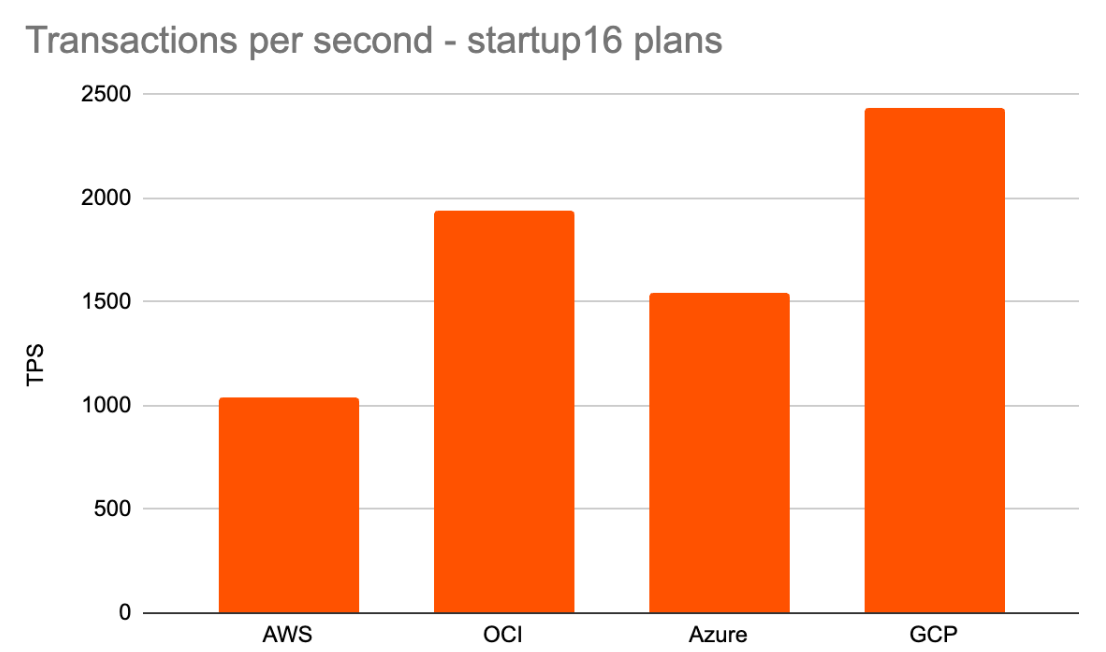

Aiven Startup-16 Plans

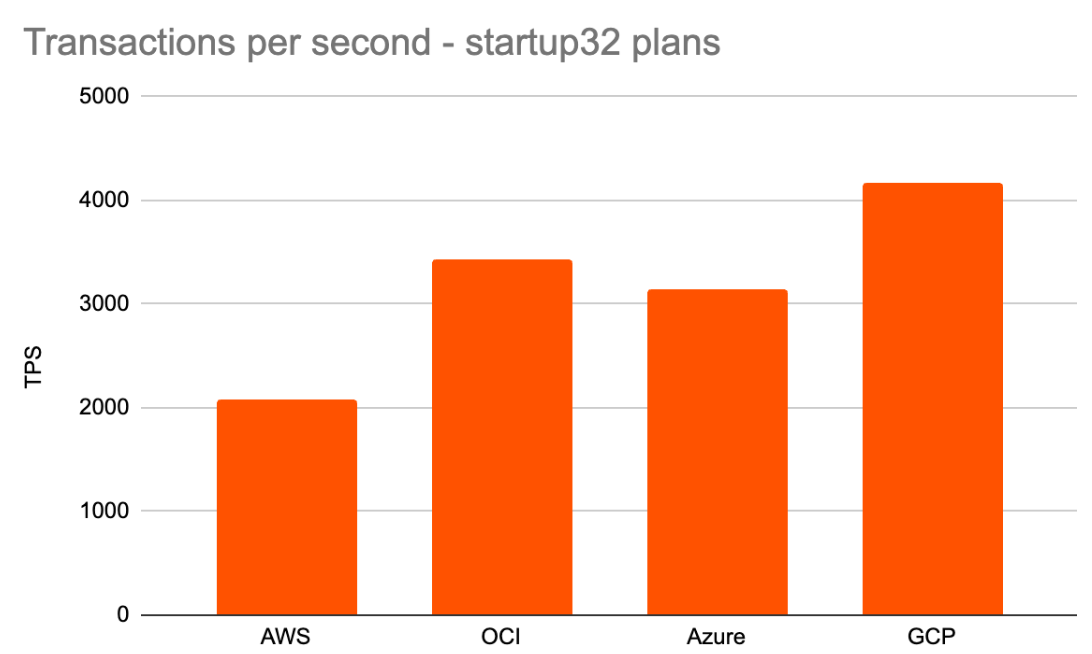

Aiven Startup-32 Plans

Aiven Startup-64 Plans

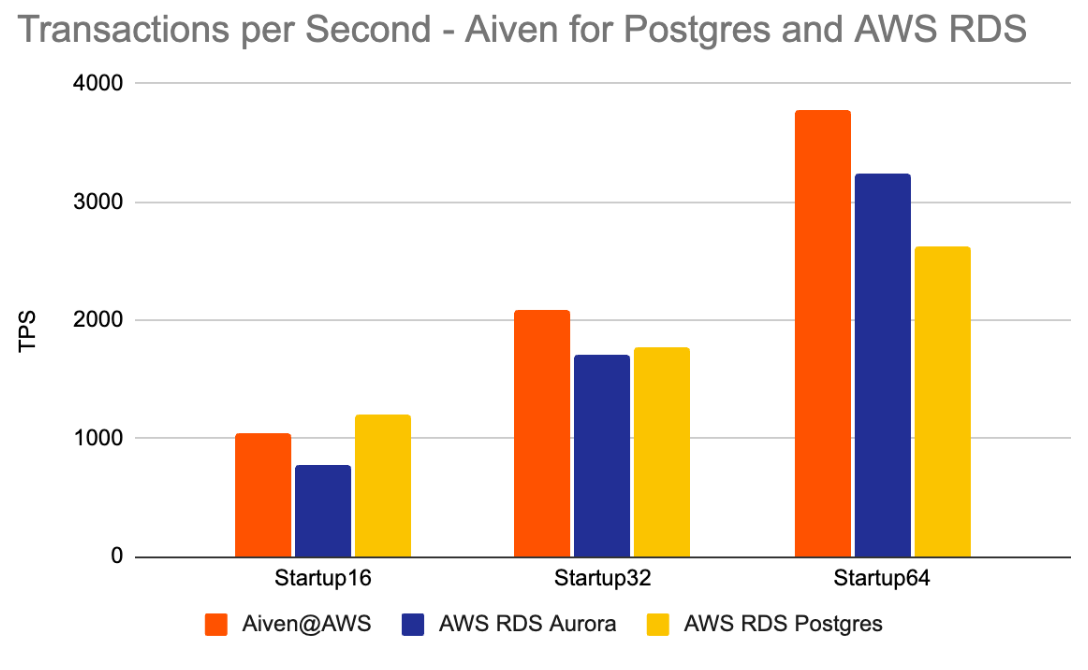

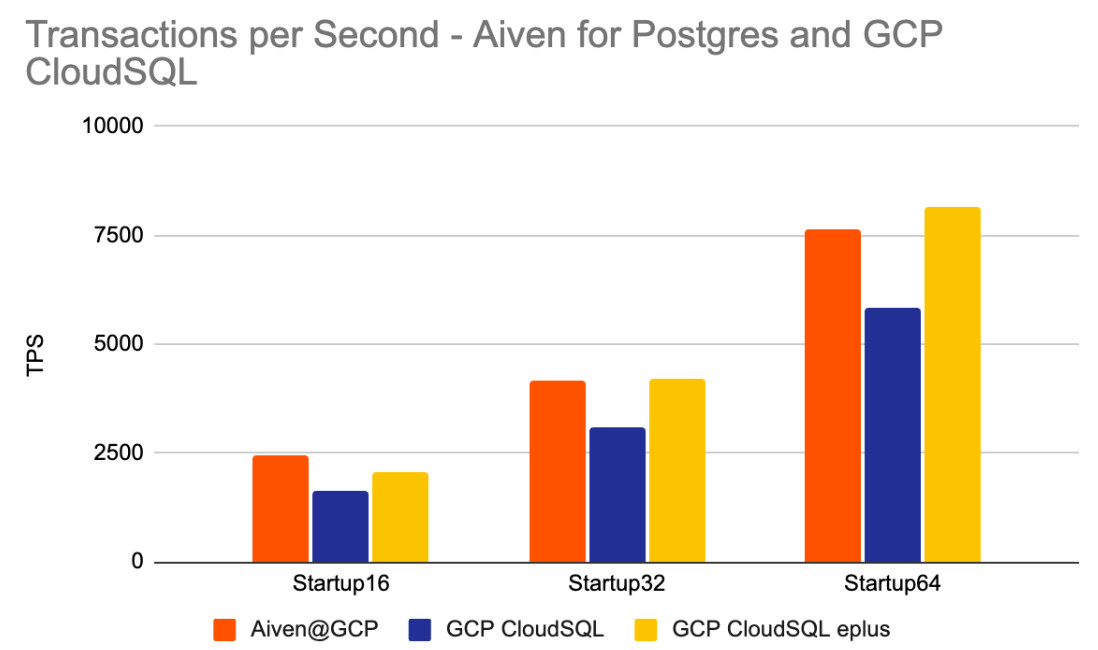

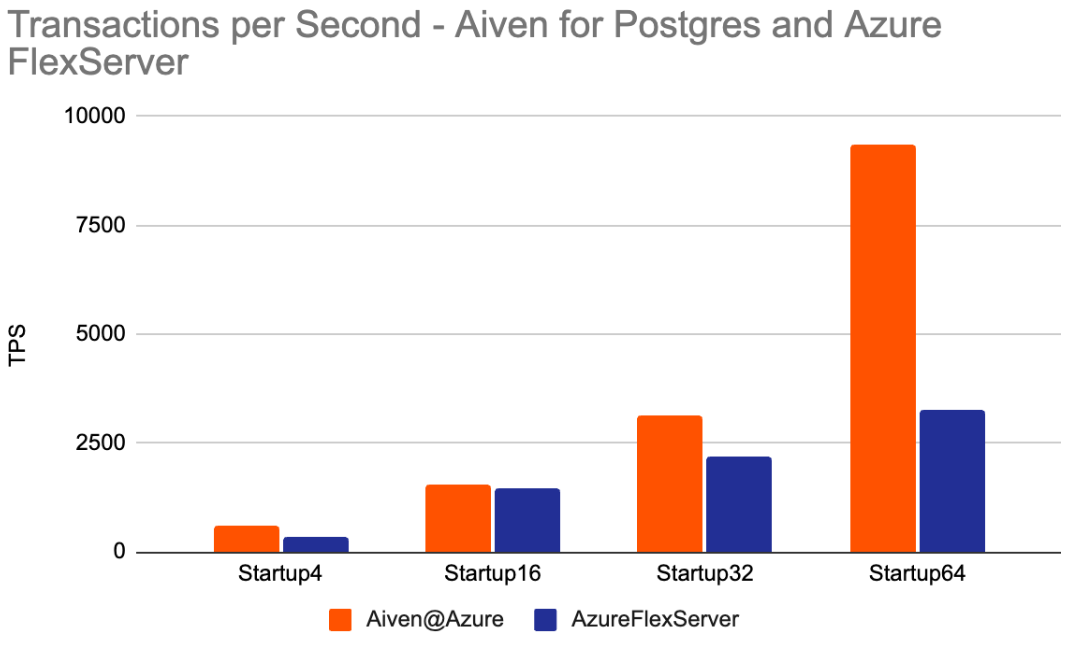

Let's have a look at the other side of the fence

To put the results in context, we also ran similar benchmarks on the hyperscalers' own PostgreSQL-based fork offerings.

Aiven vs. AWS RDS Postgres and AWS RDS Aurora

Aiven vs. GCP CloudSQL and CloudSQL Enterprise Plus

Aiven vs. Azure Flex Server

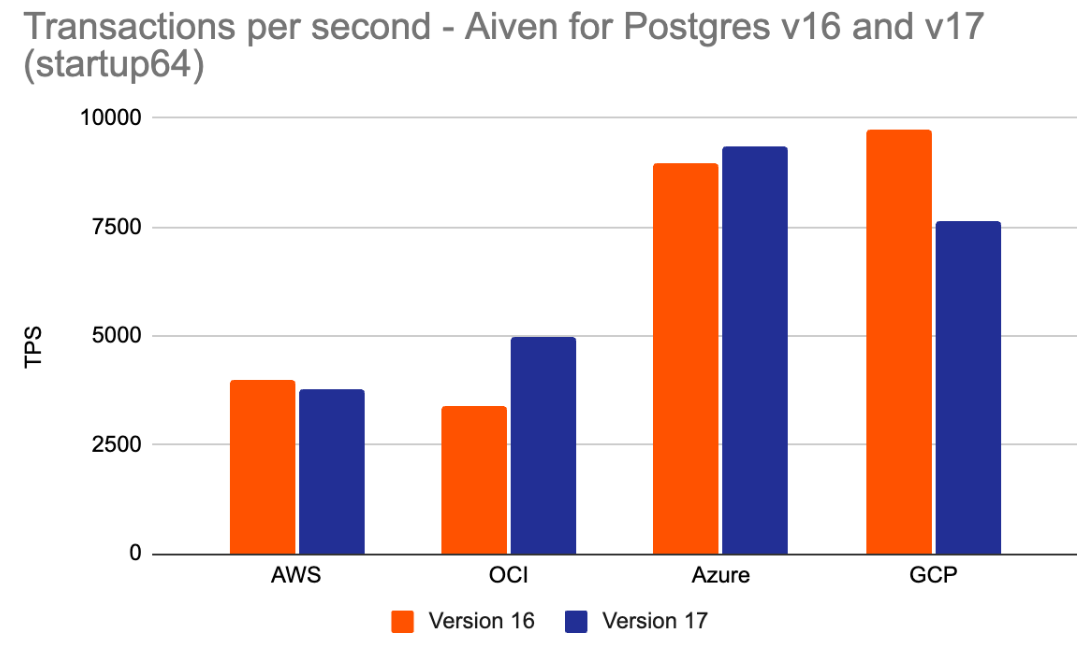

What about Aiven for PostgreSQL v16 vs. v17?

When you upgrade to PostgreSQL 17, you can experience a tangible performance boost. Most of our benchmarks show a consistent 5-10% increase in transaction throughput over version 16, with potential for even higher gains on specific plans, enabling you to handle more workload with the same resources.

- We are currently investigating some unexpected anomalies between v16 and v17 on GCP and AWS, and we will update this post once we know more.

A few words about latencies

All benchmark tests are very sensitive to overall network latency. That’s why we used a client VM in the same hyperscaler, region, and AZ as each database. To be fully transparent about interpreting the results, we also measured the average latency for each test run.

| Benchmark Run | Average latency (ms) |
|---|---|
| Aiven for PostgreSQL @ AWS | 13.75 |
| Aiven for PostgreSQL @ OCI | 9.00 |
| Aiven for PostgreSQL @ Azure | 8.69 |
| Aiven for PostgreSQL @ GCP | 8.61 |
| AWS RDS PostgreSQL (r8g) | 19.13 |
| AWS RDS Aurora 16 | 17.24 |
| Azure Flex Server | 13.57 |

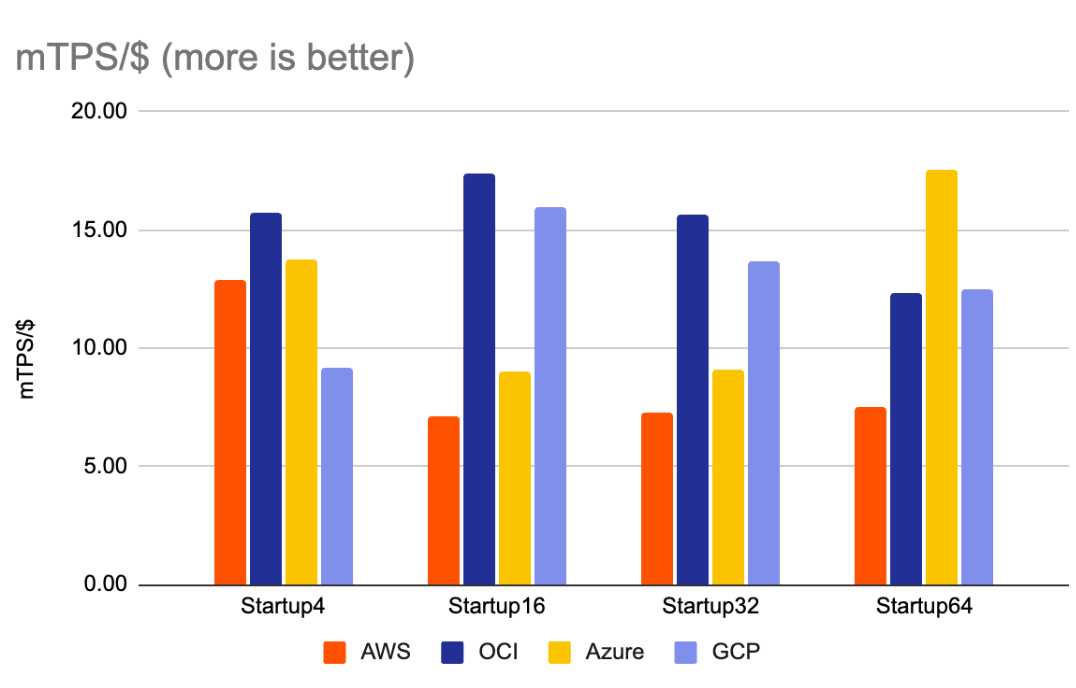
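One way to see why latency matters so much: pgbench clients work in a closed loop, so each client session waits for one transaction to finish before sending the next. To a first approximation (no think time, no rate limiting), throughput is therefore the number of clients divided by the average transaction latency. The sketch below illustrates that relationship with hypothetical inputs. It is not derived from the measurements in this post, and the function name is an assumption.

```python
def closed_loop_tps(clients: int, avg_latency_ms: float) -> float:
    """Approximate throughput of a closed-loop benchmark: clients / average latency."""
    if avg_latency_ms <= 0:
        raise ValueError("latency must be positive")
    return clients * 1000.0 / avg_latency_ms


if __name__ == "__main__":
    # Hypothetical example: doubling the latency halves the achievable TPS.
    for latency_ms in (10.0, 20.0):
        print(f"16 clients at {latency_ms} ms -> {closed_loop_tps(16, latency_ms):.0f} TPS")
```

This is why a few milliseconds of extra network round-trip time between client VM and database can move the TPS figure noticeably, even if the database itself is equally fast.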

mTPS/$ average per service plan size

Finally, we concluded the benchmark by calculating the average mTPS/$ for each PostgreSQL plan based on publicly available pricing information. Up to this point, performance on the selected industry-standard compute instances has been expressed purely as transactions per second. So, let’s now dig deeper into the value for the price.

| Plan | Price/month $ | TPS | Avg Price/hour $ | mTPS/$ |
|---|---|---|---|---|
| pg17-aws-startup-4 | 115 | 572 | 0.16 | 12.89 |
| pg17-aws-startup-16 | 380 | 1042 | 0.53 | 7.11 |
| pg17-aws-startup-32 | 740 | 2084 | 1.03 | 7.30 |
| pg17-aws-startup-64 | 1300 | 3772 | 1.81 | 7.52 |
| pg17-oci-startup-4 | 100 | 608 | 0.14 | 15.75 |
| pg17-oci-startup-16 | 290 | 1941 | 0.40 | 17.35 |
| pg17-oci-startup-32 | 570 | 3435 | 0.79 | 15.62 |
| pg17-oci-startup-64 | 1050 | 4985 | 1.46 | 12.31 |
| pg17-azure-startup-4 | 115 | 610 | 0.16 | 13.74 |
| pg17-azure-startup-16 | 445 | 1547 | 0.62 | 9.01 |
| pg17-azure-startup-32 | 895 | 3149 | 1.24 | 9.12 |
| pg17-azure-startup-64 | 1385 | 9372 | 1.92 | 17.54 |
| pg17-gcp-startup-4 | 99 | 353 | 0.14 | 9.24 |
| pg17-gcp-startup-16 | 395 | 2412 | 0.55 | 15.82 |
| pg17-gcp-startup-32 | 790 | 4874 | 1.10 | 15.99 |
| pg17-gcp-startup-64 | 1580 | 6922 | 2.19 | 11.35 |

As the calculation above shows, the number of transactions you get for a given price does not necessarily mirror the overall performance results of the benchmarks. If the best price/performance ratio is the key criterion for choosing a cloud, a higher value in this table means a better rating for that plan size.
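The post does not spell out how mTPS/$ is derived. The values in the table are consistent with reading it as millions of transactions per dollar, based on a 720-hour (30-day) month. Both the interpretation and the constant are inferences from the table, not a stated formula. Under that assumption, the following sketch reproduces the hourly prices, and the mTPS/$ column to within 0.01. The function names are hypothetical.

```python
# Assumption: a 30-day month, which reproduces the "Avg Price/hour" column.
HOURS_PER_MONTH = 720
SECONDS_PER_HOUR = 3600


def price_per_hour(price_per_month: float) -> float:
    """Convert a monthly plan price into an average hourly price."""
    return price_per_month / HOURS_PER_MONTH


def mtps_per_dollar(tps: float, price_per_month: float) -> float:
    """Millions of transactions completed per dollar spent (inferred definition)."""
    transactions_per_hour = tps * SECONDS_PER_HOUR
    return transactions_per_hour / price_per_hour(price_per_month) / 1_000_000


if __name__ == "__main__":
    # Inputs taken from the table above: (plan, TPS, price per month in $).
    for plan, tps, price in [("pg17-aws-startup-4", 572, 115),
                             ("pg17-azure-startup-64", 9372, 1385)]:
        print(f"{plan}: {price_per_hour(price):.2f} $/h, "
              f"{mtps_per_dollar(tps, price):.2f} mTPS/$")
```

The small 0.01 differences on some rows are most likely because the published TPS values are themselves rounded.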

Wrapping up

In a data-driven world, benchmarks remain pivotal to understanding the full picture. When deciding which cloud or region to run in, it's crucial to understand the differences in performance. As the underlying compute instances evolve and change frequently, results will shift in all directions between the hyperscalers. Even though everything runs on dedicated, non-shared resources with stable latencies, we saw anomalies in the results that we will continue to investigate. The basic idea of this benchmark field test was to generate significant load on the tested services using a large number of parallel client connections, so that you are armed with this crucial knowledge.

Keep in mind that this is just one representative study with a selected workload, meant to give an impression of database performance. Every workload in the field could paint a completely different picture and has to be evaluated separately. But as an indication of basic performance, this test run should give valuable insight for decisions.

We always recommend that standalone PostgreSQL users identify performance bottlenecks and tune their database configuration, workload, or hardware configuration to optimize performance. This can be a time-consuming task.

Aiven was founded by developers to solve the challenges that developers face. That is why, in our managed PostgreSQL services, PostgreSQL system parameters come preconfigured for typical workloads, and you can try out different hardware configurations by choosing different Aiven for PostgreSQL plans. You can even add our free AI Insights feature, which analyzes your database's performance and helps developers write performant code 100x faster by providing index and SQL rewrite recommendations.

As a standard practice, we recommend that you run your own benchmarks, and we will soon publish our Terraform benchmarking scripts, which make it easy to repeat similar performance tests.

Stay tuned for additional blog posts about benchmarking our services, like this one, coming soon!

What's Next?

In the next iterations, we’ll introduce results for other workloads provided by alternative benchmark suites like HammerDB. That will provide more insight into performance for more OLAP/analytical workloads and open the door to comparisons with other databases, like MySQL and Oracle Database.

Additionally, we will document the underlying compute instances in the next step, so that this benchmark series remains a valid reference over time and can be used to observe performance changes over longer periods.

We also want to extend the tests to the parameter-settings level: first, to measure sets of performance-improving configuration tweaks for specific workloads, and second, to enable better comparisons with the hyperscaler fork offerings.

Your next step could be to check out Aiven for PostgreSQL.

If you're not using Aiven services yet, go ahead and sign up now for your free trial at https://console.aiven.io/signup!

In the meantime, make sure you follow our changelog and blog RSS feeds or our LinkedIn and Twitter accounts to stay up-to-date with product and feature-related news.
