Oct 1, 2020

Aiven Terraform provider joins the Terraform registry with v2

We’re marking our Terraform provider’s two-year anniversary with v2 and making it official with a Terraform registry listing. Read more about what all of this means.

Chris Gwilliams

Solution Architect at Aiven

You may remember that we released the first version of the Aiven Terraform Provider way back in 2018. We’re actually on the cusp of its two-year anniversary, so I thought I’d pop by and reminisce about what it is, show you what you can do with it, and cover what’s new.

Before we get reacquainted with Terraform, let’s go ahead and get the updates out of the way.

Aiven’s Terraform provider — two years later.

- Automatic installation through the Terraform registry (no more downloading a release from GitHub!)

- New resources have been added, such as accounts, Kafka schemas and VPC peering

- Documentation has been added to the repository with examples for various services

- Support for managing MySQL databases

- Termination protection for Aiven services
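As a rough illustration of the last two items, here is a minimal sketch of a MySQL service with termination protection switched on and a database managed inside it. It assumes the resource names used by recent provider releases (`aiven_mysql`, `aiven_mysql_database`); older releases may name these differently, so check the registry documentation for the version you pin. The project, service and database names are hypothetical.

```hcl
# Sketch only: "my-project", "demo-mysql" and "app_db" are hypothetical names.
resource "aiven_mysql" "db" {
  project      = "my-project"
  cloud_name   = "google-europe-west1"
  plan         = "startup-4"
  service_name = "demo-mysql"

  # Asks Aiven to refuse deletion of this service while the flag is true.
  termination_protection = true
}

# A database managed inside the MySQL service above.
resource "aiven_mysql_database" "app" {
  project       = aiven_mysql.db.project
  service_name  = aiven_mysql.db.service_name
  database_name = "app_db"
}
```

With protection enabled, an attempt to destroy the service should fail until the flag is set back to `false`, which is exactly the safety net you want on production services.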

This is just a snippet, but you can see the whole list of updates from 2018 to now in the provider’s GitHub repository. But enough about what’s new; let’s remind ourselves what Terraform truly is and what it means to those who use it.

What is Terraform, really?

Terraform is part of a trendy little movement known as Infrastructure as Code. This means that you can write out the architecture of your systems in a structured language; in Terraform’s case, the HashiCorp Configuration Language (HCL).

They say a code snippet is worth a thousand words, so how about a code snippet within an image?

You can set up Terraform through HashiCorp’s Terraform Cloud offering or locally through the CLI.
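To give a feel for HCL itself, here is a minimal, provider-free sketch showing the basic shape of the language: blocks with a type and labels, arguments written as `name = value`, and expressions that reference other blocks. The names `greeting` and `message` are purely illustrative.

```hcl
# A "variable" block: a block type, one label, and a body of arguments.
variable "greeting" {
  type    = string
  default = "hello"
}

# An "output" block whose value is an expression that references the variable.
output "message" {
  value = "${var.greeting}, Terraform"
}
```

Running `terraform apply` in a directory containing only this file should report an output named `message` with the value `hello, Terraform`, without creating any infrastructure.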

Because Kubernetes gets to be called k8s and Terraform is a word I do not want to type in my terminal 40 times a day, I found it useful to set up a short shell alias for it (for example, alias tf=terraform).

So, what does it do?

Terraform was created to replace all of the bash scripts, webhooks and listeners you wrote to deploy your software. Providers exist for many of the online services you use (yes, Aiven is there, but so are AWS, GCP and many more).

If you have trusted different components of your architecture to different experts throughout the Interwebs, then Terraform is the glue that holds them all together. “Does that mean I have to write everything again?” I hear you cry. No! Terraform allows you to import existing resources that you have already deployed and treat them as if they were created right there in your script.
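As a sketch of what importing looks like, Terraform 1.5 and later let you declare an import in HCL with an `import` block; earlier versions use the `terraform import` command instead. This assumes an existing Kafka service and that the Aiven provider identifies services as `<project>/<service_name>`; both names below are hypothetical.

```hcl
# Terraform 1.5+: adopt an existing Aiven service into state on the next apply.
import {
  to = aiven_kafka.existing
  id = "my-project/my-existing-kafka" # hypothetical "<project>/<service_name>"
}

# The matching resource block; its arguments should describe the service as it exists today.
resource "aiven_kafka" "existing" {
  project      = "my-project"
  cloud_name   = "google-europe-west1"
  plan         = "business-4"
  service_name = "my-existing-kafka"
}
```

After `terraform apply`, the service is tracked in state like anything Terraform created itself, and later plans flag any argument here that differs from the live service.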

You might be thinking, “So, it’s Ansible for hipsters.” Maybe. Maybe not. Technology is moving fast and it shows no sign of slowing down. Public cloud usage increases year over year, and anything that simplifies the interaction with these platforms is a win.

Terraform’s USP (unique selling point) is that it stores the state of all the pieces that make up your infrastructure and compares that state with the changes you want to make. If any of those changes would destroy something you did not intend to, Terraform tells you before the change is made, saving you thousands of alerts.

Equally, if a change is made outside of Terraform, running the same plan again will tell you what has changed and what reverting it to the state you declared would involve. It’s not just simplifying and automating deployments; it’s giving you the equivalent of a git log for your infrastructure.

The Terraform registry

When you use a Terraform provider, Terraform looks for it in the Terraform registry. If the provider isn’t listed there, you have to install it manually by following the instructions in the Terraform documentation. As those of you who used the Aiven provider before its listing know all too well, that process is tedious: you download binary releases to your development machine and configure your CI pipeline to do the same.

Now, it’s as simple as providing the name and version and Terraform will handle the rest.
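In practice, the name and version go in a `required_providers` entry inside the `terraform` block, a syntax introduced in Terraform 0.13. A minimal sketch: the `source` address `aiven/aiven` matches the registry address used in the upgrade command for existing users, while the version constraint is only an example, so tighten it to the release you have tested.

```hcl
terraform {
  required_providers {
    aiven = {
      source  = "aiven/aiven" # short for registry.terraform.io/aiven/aiven
      version = ">= 2.0.0"    # example constraint; pin the release you have tested
    }
  }
}
```

`terraform init` then downloads the provider from the registry, with no manual binary download involved.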

Enough talking, show me the goods!

I will be the first to admit that I’m no wordsmith and the places my eyes jump to in a technical post are screenshots and code blocks. With that in mind, I’ll spare you the ordeal of scrolling past my thoughts on vim compared to IDEs and I will get stuck in with an example.

In this (rather simple) example, I’ll use my Aiven account to deploy a Kafka cluster (with Kafka Connect enabled), an OpenSearch cluster and a corresponding sink connector that will push every message in a topic of my choosing into OpenSearch. And I’ll do it in... fewer than 80 lines. More importantly, I’ll do it in a way that lets you run the code in this post with ease.

1. Set up your directory

We’ll use the following layout, so create that directory anywhere you like:

aiven_kafka_es/
  main.tf
  variables.tf

2. Write the main file

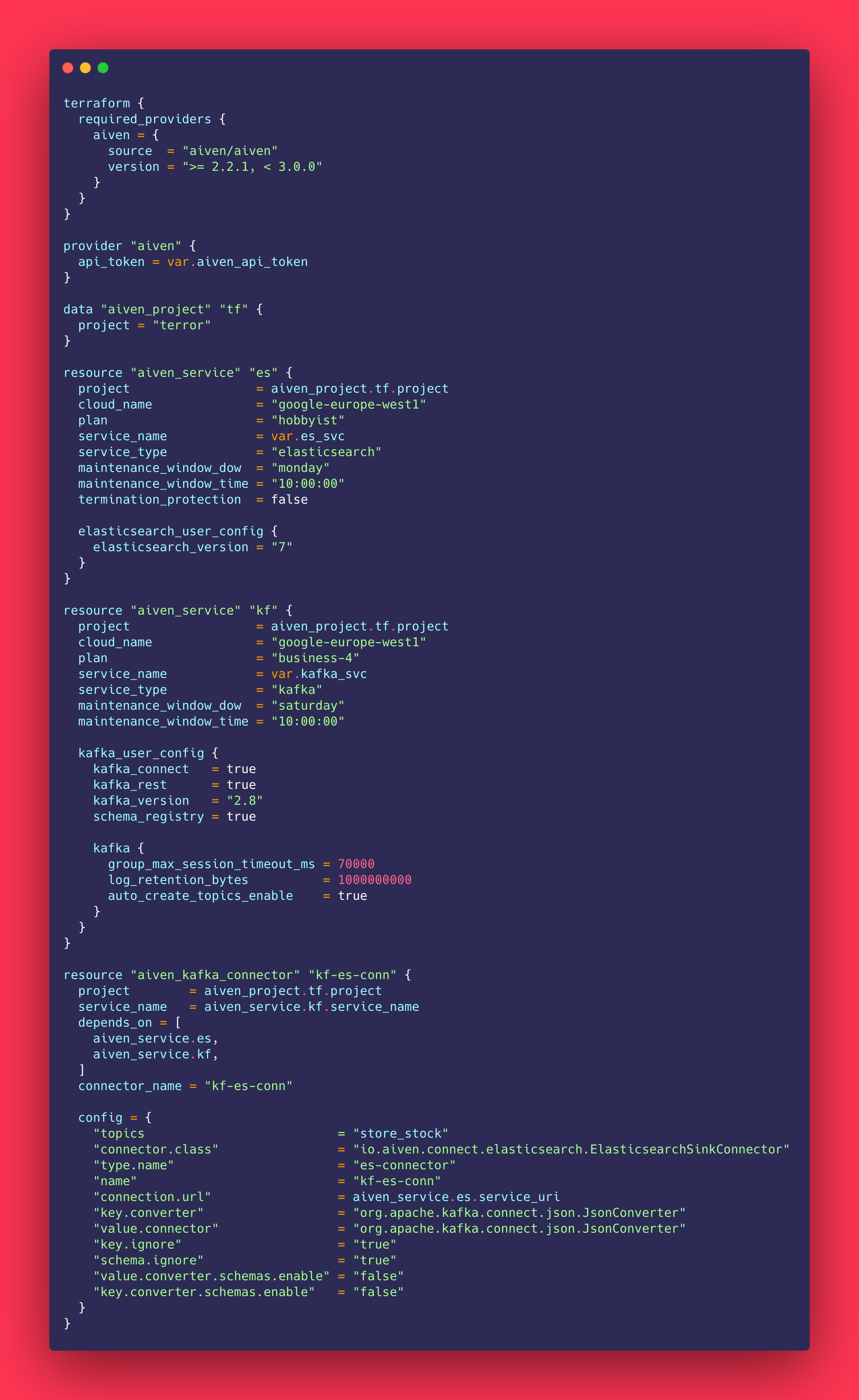

An HCL file is made up of blocks, and to get started we need a terraform block declaring the providers we use and a provider block that configures Aiven.
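A minimal sketch of the provider configuration, assuming the `terraform` block with `required_providers` (source `aiven/aiven`) is also present and that the API token is supplied through a variable named `aiven_api_token` declared in variables.tf. That variable name is our own, hypothetical choice.

```hcl
# Configure the Aiven provider; every aiven_* resource in this directory uses it.
provider "aiven" {
  api_token = var.aiven_api_token # hypothetical variable, declared in variables.tf
}
```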

Then we can get started with our resources (or we can use data sources for existing resources, but we will get to that). If you’ve been living under an abandoned hermit crab shell and haven’t yet heard of Aiven, we provide managed open-source software for data pipelines. Deploying services requires a project, and you can create everything either within Terraform or through the Web Console. For the fun of it, let’s do it all from Terraform.
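For the data route mentioned above, a `data` block reads an existing object instead of creating one. A minimal sketch, assuming a project you already have (the name is hypothetical):

```hcl
# Read an existing Aiven project instead of creating it.
data "aiven_project" "existing" {
  project = "my-existing-project" # hypothetical project name
}

# Other blocks can then refer to it, for example:
#   project = data.aiven_project.existing.project
```

Terraform never creates or destroys anything declared with `data`; it only reads it during plan and apply.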

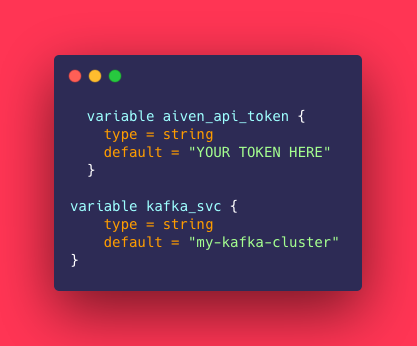

First, we will need an API token. Log in (or sign up and get $300 of free credits) and navigate to your user profile (the User icon in the top right) to create a new Authentication Token. Copy the token and let’s put it into variables.tf. While we’re there, let’s also add a variable holding the name of the Kafka cluster we want to make.
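Here is a sketch of what variables.tf might contain; the variable names and the default Kafka name are our own, hypothetical choices. Rather than pasting the token in as a default, this version leaves it without one, so Terraform prompts for it or reads it from the `TF_VAR_aiven_api_token` environment variable. The `sensitive` argument needs Terraform 0.14 or later.

```hcl
# variables.tf

# Aiven authentication token. With no default, Terraform asks for it at plan/apply
# time or reads it from the TF_VAR_aiven_api_token environment variable.
variable "aiven_api_token" {
  type      = string
  sensitive = true # Terraform 0.14+: keeps the value out of plan output
  # default = "<your token>"   # also works, but avoid committing real tokens
}

# Name for the Kafka service we are about to create.
variable "kafka_name" {
  type    = string
  default = "demo-kafka"
}
```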

Done! Let's create a project and deploy a Kafka cluster somewhere around the world. Append this to your main.tf and we’re good to go.
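A sketch of what could be appended. It assumes the variables from variables.tf, a hypothetical project name, and the service-specific `aiven_kafka` resource from recent provider releases; the version this walkthrough was written against used a generic service resource configured with a `service_type` argument, so adjust to the release you pin. The plan name and the `auto_create_topics_enable` setting (so the sink connector’s topic can be created on first use) are also assumptions.

```hcl
# A new Aiven project to hold both services (hypothetical name; must be unique).
resource "aiven_project" "demo" {
  project = "demo-kafka-opensearch"
}

# Kafka on Google Cloud in Europe, with Kafka Connect running alongside it.
resource "aiven_kafka" "kafka" {
  project      = aiven_project.demo.project
  cloud_name   = "google-europe-west1"
  plan         = "business-4" # assumed: a business-tier plan, for Kafka Connect
  service_name = var.kafka_name

  kafka_user_config {
    kafka_connect = true # enable Kafka Connect on this service

    kafka {
      auto_create_topics_enable = true # assumption: lets stuff_topic appear on first use
    }
  }
}
```

Referencing `aiven_project.demo.project` rather than repeating the string is what tells Terraform to create the project before the Kafka service.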

So, we’ve created a project and deployed a Kafka cluster inside GCP to the Europe region. The configuration object we put here also allows us to provide settings to override defaults (like the version we want or integrations we want to enable). Don’t worry about the numbers if this is all new to you, this is mainly to show that (almost) all of the features we have in our API are available in our Terraform provider.

Do you realise what that means!?! In a single script, you can deploy a whole suite of clusters AND configure Access Control Lists (ACLs) AND apply more advanced configurations for each service (like enabling PgBouncer for Postgres or updating a schema inside our Kafka Schema Registry, Karapace).
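As an illustration of the ACL point (not part of this walkthrough, since appending it would add two more resources to the plan), here is a sketch that creates a Kafka user and grants it read access to one topic. It assumes the project and Kafka service are declared as `aiven_project.demo` and `aiven_kafka.kafka` (hypothetical resource names); the user name is hypothetical too.

```hcl
# A service user for consumers (hypothetical name).
resource "aiven_kafka_user" "reader" {
  project      = aiven_project.demo.project
  service_name = aiven_kafka.kafka.service_name
  username     = "reader"
}

# Allow that user to read, but not write, a single topic.
resource "aiven_kafka_acl" "reader_stuff" {
  project      = aiven_project.demo.project
  service_name = aiven_kafka.kafka.service_name
  username     = aiven_kafka_user.reader.username
  topic        = "stuff_topic"
  permission   = "read"
}
```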

3. Run it

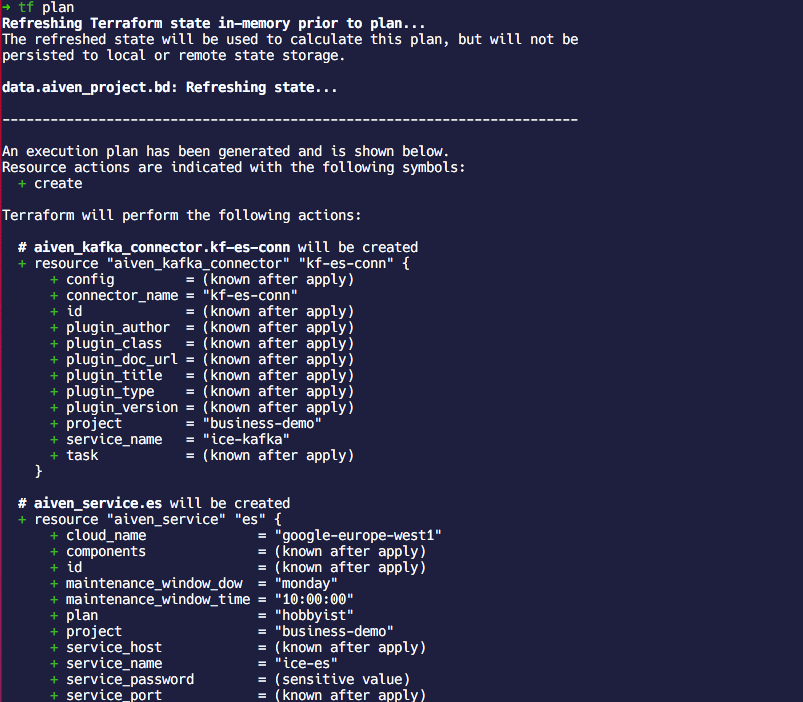

So, let’s make it happen! Just run terraform plan (run terraform init first if you haven’t done so already) and you’ll see output showing you what’s going to change or happen. The printout is quite long, but look for the summary line of the form “Plan: N to add, 0 to change, 0 to destroy.”

If you don’t, you’ll see errors with the line number, file and reason for failure. I’m certain all went well, so now we want to tell Terraform to do the deploying already!

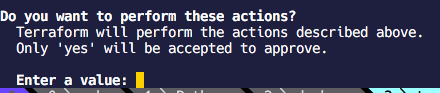

Running terraform apply will show you the same result as plan (hopefully) and then ask you to approve it. If you agree, type yes and hit enter. Do some squats as your requests go through the Interwebs and we work our magic or, if you’re very impatient, head over to the Web Console and watch your new services spawn.

4. What about OpenSearch and Kafka Connect?

Wow, you have a Kafka cluster running but what the heck are you going to do with it? How about filling it with the works of Shakespeare and then indexing it with OpenSearch? Sure, it’s one option. But you’ll need OpenSearch and some form of connection from Kafka to this OpenSearch, some kind of...Kafka Connector?

Adding this to our main.tf will create an OpenSearch instance in AWS and use the Kafka Connect we enabled with our Kafka Cluster to configure a sink connector (a piece of software that takes data FROM Kafka and dumps it TO somewhere, depending on your configuration).
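A sketch of that addition. It assumes the project and Kafka service are declared as `aiven_project.demo` and `aiven_kafka.kafka` (hypothetical resource names) with Kafka Connect enabled, uses a hypothetical OpenSearch service name, and takes the connector class and options from Aiven’s OpenSearch sink connector. Treat the option list as a starting point and compare it with the configuration the Web Console generates. It adds exactly two resources.

```hcl
# OpenSearch on AWS (hypothetical service name).
resource "aiven_opensearch" "os" {
  project      = aiven_project.demo.project
  cloud_name   = "aws-eu-west-1"
  plan         = "startup-4"
  service_name = "demo-opensearch"
}

# Sink connector running on the Kafka service's Kafka Connect:
# every message produced to stuff_topic is indexed into OpenSearch.
resource "aiven_kafka_connector" "os_sink" {
  project        = aiven_project.demo.project
  service_name   = aiven_kafka.kafka.service_name
  connector_name = "opensearch-sink"

  config = {
    "name"            = "opensearch-sink" # kept identical to connector_name
    "connector.class" = "io.aiven.kafka.connect.opensearch.OpensearchSinkConnector"
    "topics"          = "stuff_topic"

    # Connection details come straight from the OpenSearch resource above.
    "connection.url"      = "https://${aiven_opensearch.os.service_host}:${aiven_opensearch.os.service_port}"
    "connection.username" = aiven_opensearch.os.service_username
    "connection.password" = aiven_opensearch.os.service_password

    # Treat values as schemaless JSON and let the connector generate document IDs.
    "tasks.max"                      = "1"
    "key.ignore"                     = "true"
    "schema.ignore"                  = "true"
    "value.converter"                = "org.apache.kafka.connect.json.JsonConverter"
    "value.converter.schemas.enable" = "false"
  }
}
```

Because the connector’s config references attributes of `aiven_opensearch.os`, Terraform creates the OpenSearch service first and only then the connector.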

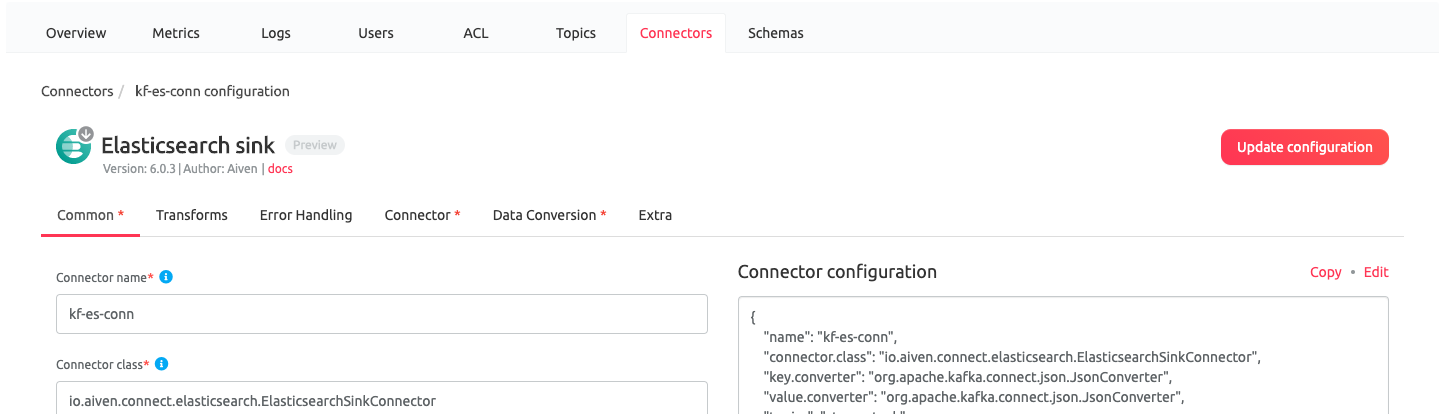

The config block for the connector might look complex, but don’t worry, you can create this in our Web Console by clicking on your Kafka Service, going to the Connectors Tab and choosing the OpenSearch Sink Connector. You can complete the form to generate a configuration and just copy the JSON to that block (swapping the : for =).

5. Run it, part deaux!

As before, run it. You’ll notice there is now a file called terraform.tfstate in your directory, so Terraform already knows that you created a project and a Kafka cluster. Running terraform apply again should show you that 2 resources are to be added.

Type yes and you are good to go. Some more squats and now you have 2 services connected in your Aiven Project. What are you still doing here? Go and publish some things to that cluster and check it out in OpenSearch!

N.B. existing users

You CAN do a straight upgrade to switch to the Terraform Registry provider by deleting terraform.d in your directory and updating the terraform block. BUT, you will also need to run

terraform state replace-provider registry.terraform.io/-/aiven registry.terraform.io/aiven/aiven

If you are using Terraform for mission-critical services, it goes without saying that you must take great care not to misclassify a service or cause some other such fun.

Bringing it all together

What the heck have we just done?

- Instantiated an Aiven provider inside Terraform and added our API token

- Created a resource for our project and gave it a name

- Created 2 service resources for Kafka and OpenSearch, where we provided the following: service_name, project, cloud_name, plan and service_type

- Created a Kafka Connector resource for an OpenSearch sink connector and provided the config for that connector to link the creatively named topic stuff_topic to our OpenSearch service, so that it consumes any message produced to that topic

Further reading

Take a look at our two-part post showing more things you can do with Terraform:

- Aiven databases and Terraform for fun and profit

- Observe your PostgreSQL metrics with Terraform, InfluxDB and Grafana

Wrapping up

In two years, we’ve managed to get our Aiven Terraform provider listed in the Terraform registry and added loads of new functionality. What does this mean? Setting it up is now a breeze and you can do even more through it than you could when we released the first version.

Two years have flown by, so I also thought it’d be nice to show those of you who might’ve missed the Infrastructure as Code movement what Terraform is, and to demonstrate just how powerful it is with some of the slick tricks you can use when managing your Aiven services.

That’s all I’ve got for now; let’s agree to meet back here in another two years. Who knows what’ll be in store by then? In the meantime, follow our changelog RSS feed, or follow us on Twitter or LinkedIn to stay up to date.

