Jan 10, 2023

Why you should think about moving analytics from batch to real-time

Learn the disadvantages of batch analytics, the benefits of switching to real-time, and how to adopt a tech stack that supports real-time analytics.

Ben Gamble

Enterprise & Solutions Marketing Lead at Aiven

What was once a pipe dream is now a reality: advances in technology over the past decade have allowed businesses to harness the power of real-time data. However, while over 80% of businesses say transforming to a real-time enterprise is critical to meeting customer expectations, only 12% have optimised their processes for real-time customer experiences, according to 451 Research data.

Real-time data is not just a nice-to-have, but a must-have for businesses to stay afloat in the “right now” economy. There are still some clear use cases for batch. Payroll and billing, for example, typically involve processing a large number of transactions on a regular schedule, and batch processing lets companies handle them efficiently in a single run rather than one at a time in real-time. However, more companies are switching to real-time in cases where getting and analysing continuous data really matters.

In this article, we’ll evaluate batch and real-time analytics, look at why switching from batch processing to real-time can prove useful, and explain how to move from a traditional tech stack to one that supports real-time analytics.

Let’s dive in.

The problems with batch data processing

Batch analytics refers to the processing and analysis of a high volume of data that has already been stored for a period of time. For example, many companies prepare their financial reports on a monthly or quarterly basis.

As computers became more powerful, batch systems started delivering data faster and faster: first in weeks, then days, then hours. Even so, batch processing can leave you with data inconsistencies, because the results may not reflect the most up-to-date state of the data. For example, if a batch process runs at night to update a database, any changes made during the day will not appear in that database until the next run.

In nearly every case, it’s more valuable to have an answer now than it is to have an answer next week.

In fact, data can lose its value even when it’s just a couple of milliseconds or microseconds old. Take financial instruments: it pays to know the current price of one down to the nanosecond.

What’s most important today is your data’s freshness, latency, and value. We’ve come to the point of needing data as it happens, meaning real-time is now the norm. Newer technologies like Apache Kafka® are replacing the traditional extract, transform, load (ETL) process in many pipelines. Now that companies can deliver huge amounts of data in near real-time, there’s less need for batch processing and the delivery delays that come with it.

We’ve come this far and there’s no going back with user and customer experience. There’s less and less room for slow analytics when nearly every industry requires real-time decision making.

While real-time used to be a specialised version of batch, batch is becoming a specialised version of real-time. And there are risks to not taking advantage of real-time analytics.

Why moving from batch to real-time analytics is a good idea

Build resilient data pipelines

Since we’ve come to rely on real-time analytics, resilience is absolutely paramount. Real-time data pipelines are resilient at heart, meaning they can easily adapt in the event of failures. While real-time data processing is more technically challenging, it's much easier to change a real-time calculation.

With batch, your master file might not be up to date and it’s risky if you mess up. Even minor errors, like typos, can bring your batch process to a halt. If you collect data for a few hours and one batch fails, then the next one will be double the size. If your machine isn’t big enough to store all the data, then you need to scale your machines, which can be quite chaotic.

With real-time, information is always up to date and it’s much easier to detect anomalies. Companies are moving away from passive risk mitigation to active risk management thanks to real-time analytics. For example, you can detect fraud signals in real-time and stop fraudulent transactions from completing.
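To make the fraud example a little more concrete, here is a minimal sketch of one common kind of real-time check: counting how many transactions a card makes inside a sliding time window and blocking the next one when the count is too high. The class name `VelocityCheck`, the threshold of three transactions, and the 60-second window are all assumptions made for illustration. A real fraud system would combine many more signals and would typically run inside a stream processor rather than a single Python process.

```python
from collections import defaultdict, deque


class VelocityCheck:
    """Flags a card that makes too many transactions within a short time window.

    Hypothetical example class; thresholds are illustrative, not recommendations.
    """

    def __init__(self, max_events=3, window_seconds=60.0):
        self.max_events = max_events
        self.window_seconds = window_seconds
        # card_id -> timestamps of accepted transactions still inside the window
        self._recent = defaultdict(deque)

    def allow(self, card_id, timestamp):
        """Return True if the transaction may proceed, False if it looks suspicious."""
        recent = self._recent[card_id]
        # Evict timestamps that have fallen out of the sliding window.
        while recent and timestamp - recent[0] >= self.window_seconds:
            recent.popleft()
        if len(recent) >= self.max_events:
            return False  # decision is made before the transaction completes
        recent.append(timestamp)
        return True


check = VelocityCheck(max_events=3, window_seconds=60.0)
# (card_id, timestamp in seconds) pairs arriving one at a time, in order
events = [
    ("card-1", 0.0), ("card-1", 10.0), ("card-2", 15.0),
    ("card-1", 20.0), ("card-1", 30.0), ("card-1", 95.0),
]
decisions = [check.allow(card, ts) for card, ts in events]
print(decisions)
```

The fourth transaction on `card-1` inside one minute is rejected as it arrives, while the one at 95 seconds is accepted again because the earlier activity has aged out of the window. A nightly batch job could only report the same pattern after the fact.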

By switching to real-time, you’re better placed to depend on the accuracy and security of your data. And, of course, your processing time will also be faster.

Your competitors are already riding the real-time wave

Once a company goes real-time, everyone else has to play catch up. Whether you like it or not, real-time is the direction the world is going. By 2025, nearly 30% of all data will be consumed in real-time, and the transformation is already well underway.

You’ve likely heard the famous quote attributed to Henry Ford: “If I had asked my customers what they wanted, they would have said faster horses.” Not transitioning to real-time is the equivalent of saying “We're gonna stick with horses.” By sticking with batch, you are choosing to fight a much harder battle than you need to.

Moving to real-time analytics helps you gain a competitive advantage and make new discoveries that could help grow your business.

Your efficiency will skyrocket

Companies using real-time analytics see huge efficiency gains.

Consider how much real-time has already driven a boom in productivity: we wouldn’t have food delivery apps, the gig economy, or Uber without real-time systems. Sure, ordering a taxi for tomorrow is great, but it's not the same as ordering one right when you need it.

With real-time analytics, warehouses can streamline their operations and monitor the conditions of their goods. They can ensure factories won’t run out of equipment or raw materials at the wrong time, and predict when a machine is about to overheat so that they can divert some workload to a different factory.

Or let’s say you have a pharmaceutical supply chain and you notice one product is out of stock in Germany — but just over the border in Switzerland, you have a surplus. Rather than only finding out about the surplus in Switzerland later, real-time analytics allow you to send the products to Germany the moment they’re needed there.

When it comes to efficiency, the power of real-time analytics benefits nearly every industry.

Increase your revenue

According to the State of the Data Race 2022 report, 71% of technology leaders agree that they can tie their revenue growth directly to real-time data.

While batch processing comes with a lower up-front investment, companies can actually cut costs with real-time since aggregating and joining data before ingestion requires less storage and processing. You can also perform serverless computing with real-time, where you only pay for compute time when you need it. Tools like AWS Lambda® allow you to cut costs and run applications during times of peak demand without crashing or over-provisioning resources.
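As a rough illustration of why aggregating before ingestion saves storage, the sketch below collapses raw sensor readings into one row per sensor per minute. The function name `aggregate_per_minute`, the event layout, and the one-minute window are assumptions chosen for the example. In a production pipeline this step would more likely be a windowed aggregation in a stream processor than a Python loop.

```python
from collections import defaultdict


def aggregate_per_minute(events):
    """Collapse raw (timestamp_seconds, sensor_id, value) events
    into one summary row per sensor per minute."""
    totals = defaultdict(lambda: [0, 0.0])  # (minute, sensor_id) -> [count, sum]
    for ts, sensor_id, value in events:
        key = (int(ts // 60), sensor_id)
        totals[key][0] += 1
        totals[key][1] += value
    return [
        {"minute": minute, "sensor_id": sensor, "count": count, "avg": total / count}
        for (minute, sensor), (count, total) in sorted(totals.items())
    ]


# Hypothetical raw readings: six events spread over two minutes
raw_events = [
    (0, "a", 10.0), (15, "a", 20.0), (30, "b", 5.0),
    (61, "a", 30.0), (75, "b", 7.0), (119, "b", 9.0),
]
rows = aggregate_per_minute(raw_events)
print(len(raw_events), "raw events ->", len(rows), "stored rows")
```

Here six raw events become four stored rows. The saving grows with event volume, since the number of stored rows depends on the number of sensors and minutes rather than on how often each sensor reports.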

The main cost with real-time is the up-front time and effort needed to set it up, and the return on investment is potentially huge.

Major payment companies, for instance, save millions per day in revenue potentially lost to fraud with the help of real-time analytics. E-commerce companies can also increase their average cart transaction size with personalized recommendations, and reduce the number of abandoned carts with reminder emails.

Every second wasted can cost millions for today’s data-driven production lines. For example, if you have a forging production line, you can’t afford to make slow decisions. The metal equipment has to stay hot, and if it gets cold, you can lose millions of dollars per second. You don’t want to make the wrong call, have to shut down production for 10 minutes, and suddenly find yourself losing hundreds of millions of dollars due to delayed data collection.

Empower data analysts to do their jobs well

Real-time data empowers data analysts with a more complete and accurate picture of the data they are working with, which can be very helpful in their jobs.

If you've spent thousands of hours and millions of dollars a year hiring a team of smart people whose job is to analyse your data and share the results, why would you limit them to only considering the situation every 20 minutes?

How to move from batch to real-time analytics

Keep in mind that when switching from batch to real-time, you don’t lose a thing. You’re not trading in batch for real-time. Instead, you’re starting at a better place with a real-time system, and you could always add your batch system back on top if you still need it.

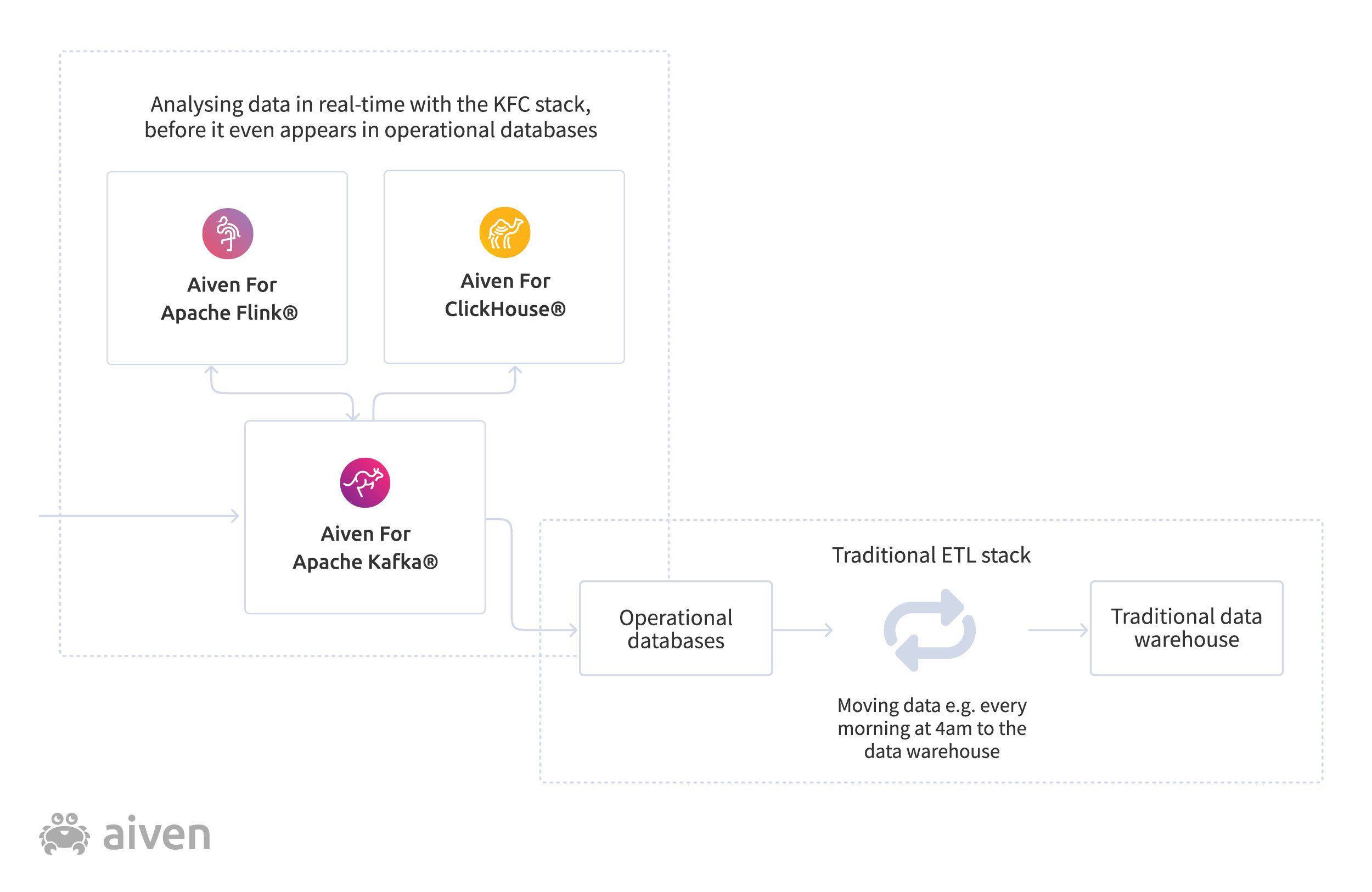

To make the switch to a real-time analytics pipeline, use open-source tools like Apache Kafka®, Apache Flink®, and ClickHouse® (KFC). The KFC stack allows you to build up a robust, scalable architecture for getting the most from your data, whether it be batched ETL or real-time metrics.

By using real-time tools, you can denormalise the data into a database management system (DBMS) like ClickHouse to allow high speed access to the joined-up data.
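Denormalising here means joining each event with its reference data before it is stored, so that queries can read one wide row instead of joining tables at query time. The sketch below shows the idea in plain Python only. It does not use the Flink or ClickHouse APIs, and the field names, the `denormalise` function, and the sample records are hypothetical.

```python
def denormalise(orders, customers):
    """Yield one flat row per order, with customer attributes copied in."""
    for order in orders:
        # Look up the customer record; fall back to an empty dict if unknown.
        customer = customers.get(order["customer_id"], {})
        yield {
            "order_id": order["order_id"],
            "amount": order["amount"],
            "customer_id": order["customer_id"],
            "customer_country": customer.get("country", "unknown"),
            "customer_tier": customer.get("tier", "unknown"),
        }


# Hypothetical reference data, keyed by customer id
customers = {
    "c1": {"country": "DE", "tier": "gold"},
    "c2": {"country": "CH", "tier": "basic"},
}
# Hypothetical order events arriving from a stream
orders = [
    {"order_id": 1, "customer_id": "c1", "amount": 40.0},
    {"order_id": 2, "customer_id": "c2", "amount": 15.5},
    {"order_id": 3, "customer_id": "c9", "amount": 8.0},
]
wide_rows = list(denormalise(orders, customers))
for row in wide_rows:
    print(row)
```

Each resulting row already carries the customer's country and tier, so an analytical query can filter or group on those columns directly. Orders with an unknown customer are kept and marked `"unknown"` rather than dropped, which is one possible design choice among several.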

Many other tools are moving in the direction of real-time. Materialize, for example, offers a distributed streaming database that enables immediate, widespread adoption of real-time data for applications, business functions, and other data products. Israeli startup Firebolt allows you to deliver sub-second and highly concurrent analytics experiences over big and granular data.

Ultimately, moving to real-time does require more than just adopting new tools. It requires a change of mindset. Companies need to modernise their data architectures to move at machine-speed rather than people-speed.

The real-time tide will keep rising

When the Internet first emerged, some people thought it was just a passing fad, or an overhyped idea that would fizzle out. But look where we are today.

The same thing is happening now with real-time analytics.

While real-time technology will no doubt change in the coming years, the process itself isn’t going away. Rather, it will keep evolving to become even faster.

So, will you be left behind or will you switch to real-time?

