Serverless event driven architecture with AWS Lambda functions and Apache Kafka®

Build serverless Event Driven Architectures (EDA) by combining Apache Kafka® with AWS Lambda functions. Learn how to trigger Lambda functions based on events flowing through an Apache Kafka topic.

Despite the number of tools available for stream processing, such as Apache Kafka® Streams or Apache Flink®, sometimes you want to write serverless code or functions to process events. You can do this with AWS Lambda, which lets you concentrate on writing the parsing logic without having to worry about managing or scaling the compute.

In this tutorial we'll cover the basics of how to invoke a Lambda function using an Apache Kafka trigger.

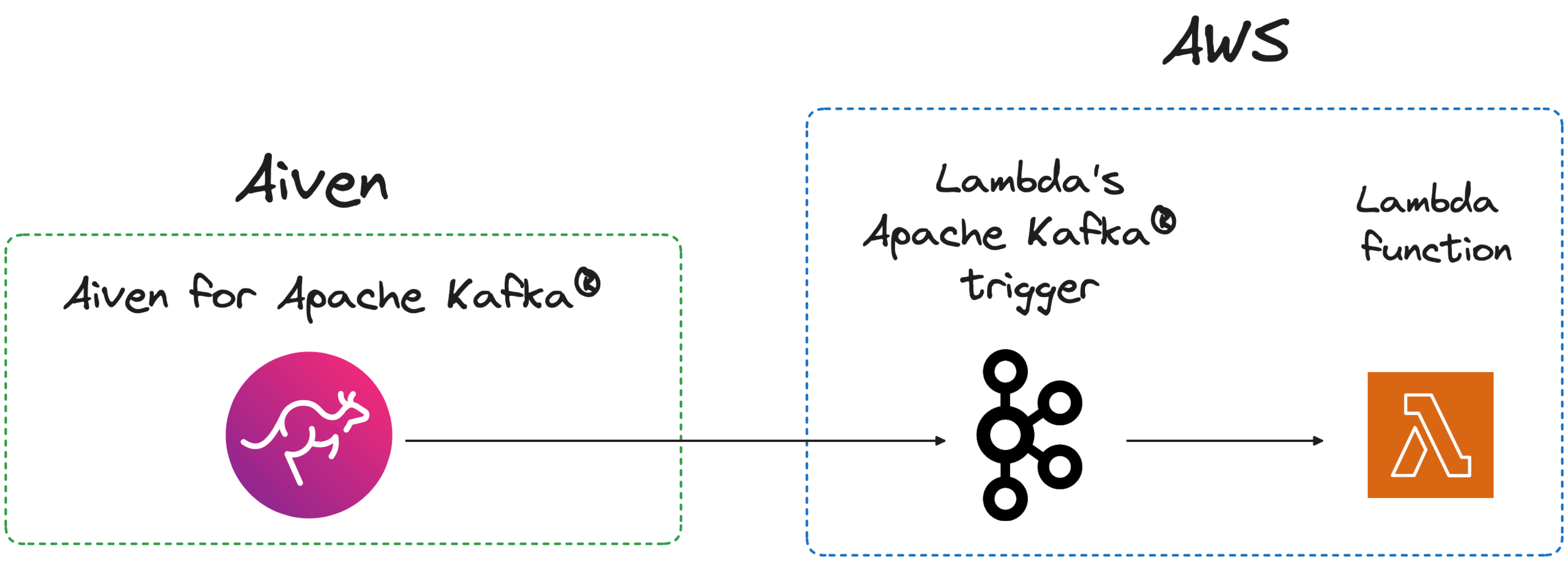

The Components

We'll use AWS Lambda triggers, in particular the Apache Kafka trigger, to consume from a specific Kafka topic and invoke the Lambda function on every event (or batch of events).

Getting started with Apache Kafka

Lambda's Apache Kafka trigger works with any Apache Kafka cluster. For simplicity, this tutorial shows how to create one with Aiven:

- Sign up for, or log in to, the Aiven Console

- If you are creating a new account, select if it's Personal or Business and give the project a name

- At Services, under Create new service choose Apache Kafka

- To finish creating your Apache Kafka® service, choose the version (default is fine), and AWS as your cloud provider.

- Create the Apache Kafka service in the same region as where you'll want to run the AWS Lambda function to minimize the latency.

- Click on Create service

Click Skip this step to jump directly to the Apache Kafka service details while the service builds in the background. Enable the Apache Kafka REST API (Karapace) toggle so we can produce and consume messages in a Kafka topic using the Aiven Console.

When the cluster is up and running, navigate to the Topics tab and create a topic called test. Click on the topic name to view it, then click Messages to consume and produce messages in the topic.

Store Apache Kafka credentials in AWS Secrets Manager

The next step is to define a secure link between Aiven for Apache Kafka and the AWS Lambda function. Aiven for Apache Kafka offers client certificate authentication out of the box, so we can store the certificates in AWS Secrets Manager by:

- Logging in to the AWS Console and accessing AWS Secrets Manager

- Clicking on Store new secret

- Select Other type of secret as Secret type

- Select the Plaintext editor and include the following JSON

    {
      "certificate": "<ACCESS_CERTIFICATE>",
      "privateKey": "<ACCESS_KEY>"
    }

The <ACCESS_CERTIFICATE> and <ACCESS_KEY> are respectively the Access certificate and Access key you can find in the Aiven Console, under the Aiven for Apache Kafka service Overview tab.

The access certificate should start with -----BEGIN CERTIFICATE----- and end with -----END CERTIFICATE-----. Include the whole content, markers included.

The access key should start with -----BEGIN PRIVATE KEY----- and end with -----END PRIVATE KEY-----. Include the whole content, markers included.

- Click Next

- Define a secret name (e.g. prod/AppLambdaTest/Kafka), a description, tags and resource permissions

- Click Next

- Define the rotation configuration and click Next

- Review the secret details and click Store

The secret contains the Aiven for Apache Kafka access certificate and key. We need to do the same set of steps to store the CA certificate. The JSON format for this second secret is:

    {
      "certificate": "<CA_CERTIFICATE>"
    }

The <CA_CERTIFICATE> is the Access CA you can find in the Aiven Console, under the Aiven for Apache Kafka service Overview tab. You need to include everything from the -----BEGIN CERTIFICATE----- to the -----END CERTIFICATE----- including the two opening and closing strings.

Name the CA-related secret prod/AppLambdaTest/KafkaCA.
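A multi-line PEM file has to become a single JSON string with escaped newlines before it goes into the Plaintext editor. The following is a minimal sketch of one way to prepare both payloads with Python's standard library; the helper name `build_secret_payload` and the placeholder PEM values are assumptions made for illustration and are not part of the Aiven or AWS tooling.

```python
import json


def build_secret_payload(**pem_fields: str) -> str:
    """Return single-line JSON text suitable for the Plaintext editor.

    Each keyword argument becomes one JSON key (for example certificate
    or privateKey) holding the full PEM text, markers included.
    """
    cleaned = {}
    for name, pem in pem_fields.items():
        text = pem.strip()
        # Basic sanity check: the BEGIN and END markers must be present.
        if not (text.startswith("-----BEGIN ")
                and "-----END " in text
                and text.endswith("-----")):
            raise ValueError(f"{name} does not look like a complete PEM block")
        cleaned[name] = text
    # json.dumps escapes the embedded newlines as \n.
    return json.dumps(cleaned)


# Placeholder values for illustration only; paste the real content
# downloaded from the Aiven Console instead.
EXAMPLE_CERT = "-----BEGIN CERTIFICATE-----\nMIIB...example\n-----END CERTIFICATE-----\n"
EXAMPLE_KEY = "-----BEGIN PRIVATE KEY-----\nMIIE...example\n-----END PRIVATE KEY-----\n"

# Secret holding the access certificate and access key.
client_secret = build_secret_payload(certificate=EXAMPLE_CERT, privateKey=EXAMPLE_KEY)
# Secret holding the CA certificate (placeholder content reused here).
ca_secret = build_secret_payload(certificate=EXAMPLE_CERT)

print(client_secret)
print(ca_secret)
```

Letting `json.dumps` do the escaping avoids hand-editing line breaks, which is an easy way to end up with a certificate that fails to parse.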

Define a Lambda function

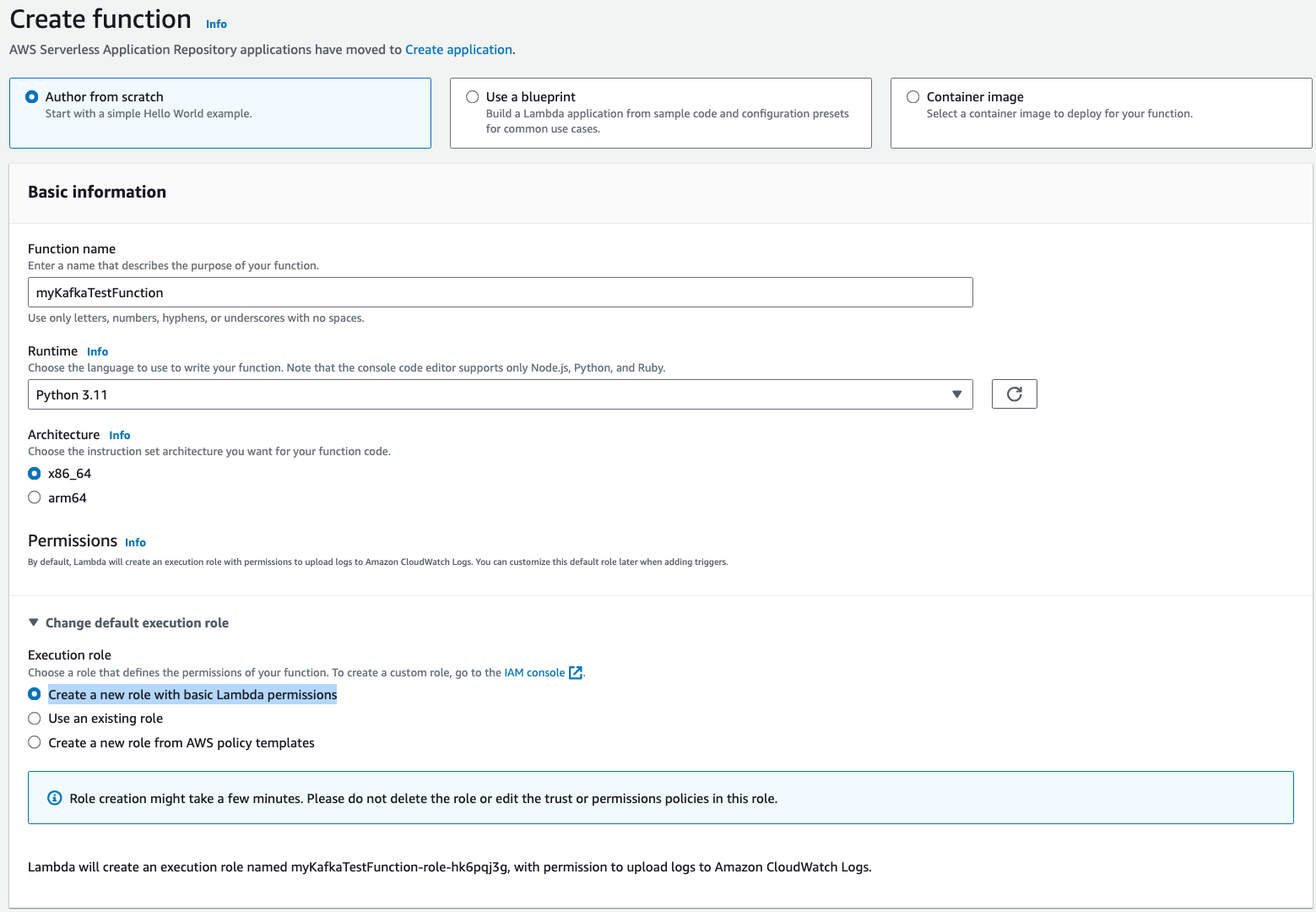

The next step is to define the Lambda function to perform some simple logging of the events coming in. We can create a Lambda function by:

- Go to the AWS Lambda service page

- Click Create function

- Select Author from scratch

- Give the function a name

- Select Python 3.11 as Runtime

- Select x86_64 as Architecture

- In the Permissions section select Create a new role with basic Lambda permissions

- Click on Create function

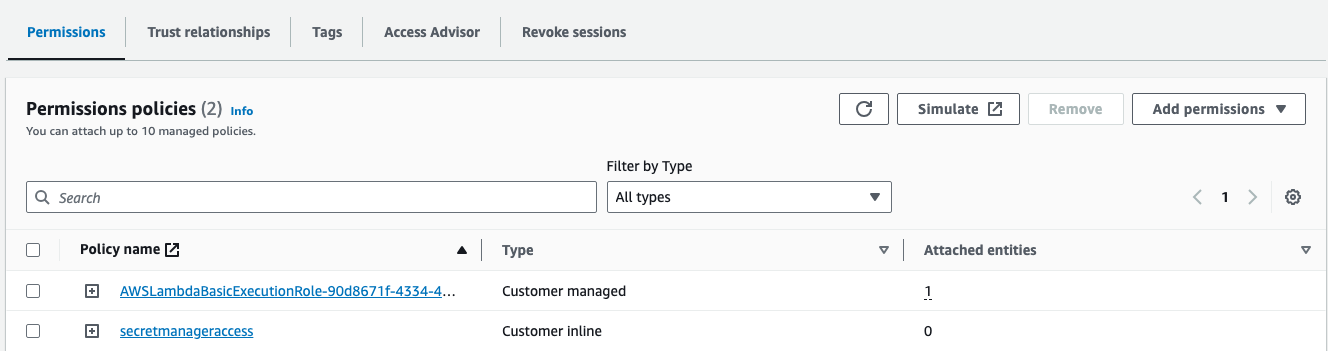

Enable the new AWS role to access the Apache Kafka secrets

The basic role created during the Lambda definition allows only CloudWatch logging by default. We need to enable the role to read the secrets containing the Apache Kafka certificates needed to connect to the Aiven service. We can do that by:

- In the AWS Lambda function details page, click on the Configuration tab

- Select the Permissions section

- Click on Edit

- Click on the View the <ROLENAME> link at the bottom of the page to edit the newly created role (<ROLENAME> is a string made of the Lambda function name, the string role and a random suffix). This opens AWS Identity and Access Management (IAM)

- Click on Add permissions and select Create inline policy

- Type Secrets Manager in the Select a service search screen and select Secrets Manager

- Expand the Read section and select GetSecretValue

- In the Resources section, optionally restrict the Amazon Resource Names (ARNs) the role can access, or select All to allow the role to access all secrets

- Give the new policy a name

- Click on Next and Create policy

The new inline policy is added to the newly created role.
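For reference, the visual editor steps above should produce a policy document roughly like the one generated by this sketch. It assumes the policy is restricted to the two secrets created earlier; the function name `secrets_read_policy` and the example ARNs (account ID, region and suffixes) are hypothetical placeholders.

```python
import json


def secrets_read_policy(secret_arns):
    """Build an IAM policy document allowing reads of the given secrets."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                # The only action selected in the Read section above.
                "Action": ["secretsmanager:GetSecretValue"],
                # Use ["*"] to mirror the "All" option in the console.
                "Resource": list(secret_arns),
            }
        ],
    }


# Hypothetical ARNs; copy the real ones from the Secrets Manager console.
EXAMPLE_ARNS = [
    "arn:aws:secretsmanager:eu-west-1:123456789012:secret:prod/AppLambdaTest/Kafka-AbCdEf",
    "arn:aws:secretsmanager:eu-west-1:123456789012:secret:prod/AppLambdaTest/KafkaCA-GhIjKl",
]

print(json.dumps(secrets_read_policy(EXAMPLE_ARNS), indent=2))
```

Listing the two ARNs explicitly keeps the role limited to the Kafka secrets instead of every secret in the account.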

Create the Lambda trigger pointing to Apache Kafka

Back on the AWS Lambda service page, the newly created function now has all the privileges needed to access the secrets for connecting to Apache Kafka. We can set up the trigger by:

- In the Function overview click on + Add trigger

- Select Apache Kafka

- Under Bootstrap servers, click on Add and enter the Aiven for Apache Kafka bootstrap server, which you can find in the Aiven Console on the service Overview tab.

- Set 1 as batch size, so the function is called on every event

- Set test as topic name

- Select CLIENT_CERTIFICATE_TLS_AUTH as Authentication

- Select the secret containing the access certificate and key in the Secrets Manager key

- Select the secret containing the CA certificate in the Encryption section

- Click on Add
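The same trigger can in principle be created programmatically. The sketch below only assembles the settings chosen above into the shape that, to the best of our knowledge, the Lambda event source mapping API expects (for example via boto3's `create_event_source_mapping`). The function name, bootstrap server and ARNs are hypothetical, and the `LATEST` starting position is an assumption, since the console steps above do not mention it.

```python
def kafka_trigger_settings(function_name, bootstrap_servers, topic,
                           client_secret_arn, ca_secret_arn, batch_size=1):
    """Collect the trigger settings from the console steps into one dict."""
    return {
        "FunctionName": function_name,
        "Topics": [topic],
        # A batch size of 1 invokes the function on every event.
        "BatchSize": batch_size,
        # Assumption: start from new messages only.
        "StartingPosition": "LATEST",
        "SelfManagedEventSource": {
            "Endpoints": {"KAFKA_BOOTSTRAP_SERVERS": list(bootstrap_servers)}
        },
        "SourceAccessConfigurations": [
            # Secret with the access certificate and access key.
            {"Type": "CLIENT_CERTIFICATE_TLS_AUTH", "URI": client_secret_arn},
            # Secret with the CA certificate.
            {"Type": "SERVER_ROOT_CA_CERTIFICATE", "URI": ca_secret_arn},
        ],
    }


# Hypothetical values for illustration only.
settings = kafka_trigger_settings(
    function_name="kafka-logger",
    bootstrap_servers=["kafka-example.aivencloud.com:12345"],
    topic="test",
    client_secret_arn="arn:aws:secretsmanager:eu-west-1:123456789012:secret:prod/AppLambdaTest/Kafka-AbCdEf",
    ca_secret_arn="arn:aws:secretsmanager:eu-west-1:123456789012:secret:prod/AppLambdaTest/KafkaCA-GhIjKl",
)
print(settings)

# With boto3 installed and credentials configured, the dict could be passed as:
#   boto3.client("lambda").create_event_source_mapping(**settings)
```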

Write the Lambda logic

The last step in our journey is to define what the Lambda function should do. To define that we need to:

- In the AWS Lambda service page for the lambda function, navigate to the Code tab

- Write the following in the lambda_function section

    import base64
    import json


    def lambda_handler(event, context):
        # Records are grouped under "<topic>-<partition>" keys; take the first key
        partition = list(event['records'].keys())[0]
        message = event['records'][partition]
        for data in message:
            # Record values arrive base64-encoded
            stringvalue = base64.b64decode(data['value'])
            value = json.loads(stringvalue)
            print('pizza ' + value['pizza'])
            print('name ' + value['name'])

In the above code we:

- include json to parse the JSON values pushed to the Kafka topic

- retrieve the partition key with partition=list(event['records'].keys())[0]. For the name of the partition key, check the details of an example event in the AWS documentation

- get the messages and, for each one, the value section (data['value']), then print the pizza and name fields after decoding from base64

It's time to deploy the function with the Deploy button.
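The handler above reads only the first topic-partition key, which is enough with a batch size of 1. With a larger batch size, a single event may carry records under several keys. The following is a minimal sketch of a variant that walks all of them; the helper name `decode_records` and the returned summary dict are assumptions added for illustration.

```python
import base64
import json


def decode_records(event):
    """Return the decoded JSON value of every record in a Kafka trigger event."""
    decoded = []
    # event["records"] maps "<topic>-<partition>" keys to lists of records.
    for records in event.get("records", {}).values():
        for record in records:
            raw = record.get("value")
            if raw is None:
                # Skip records that carry no value.
                continue
            decoded.append(json.loads(base64.b64decode(raw)))
    return decoded


def lambda_handler(event, context):
    values = decode_records(event)
    for value in values:
        print("pizza " + value["pizza"])
        print("name " + value["name"])
    # Returning a count makes the handler easier to check in tests.
    return {"processed": len(values)}
```

Keeping the decoding in its own function means it can be unit tested without deploying anything.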

Check the pipeline results

To check if the pipeline is working, we need to:

- Push records to the Apache Kafka test topic

- Check in the logs that the messages are printed

We can test it by:

- In the Aiven Console, navigate to the Aiven for Apache Kafka service

- Click on the Topics section

- Click on the test topic

- Click on the Messages button

- Click on the Produce message button

- Select json as format

- Select the Value tab

- Paste the following into the main editing area

    {
      "pizza": "Pepperoni",
      "name": "Frank"
    }

To check that the Lambda function works:

- In the AWS Lambda page, navigate to the Monitor tab

- In the Metrics subtab, you should see the number of invocations, success and error rates

- In the Logs subtab, you should be able to check the log details. Opening the latest logs you should see an entry like the following

    pizza Pepperoni
    name Frank
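The same check can be reproduced locally, without producing a real message, by calling the handler with a hand-built event. This is a minimal sketch: `make_test_event` is a hypothetical helper, and the event it builds is a trimmed-down approximation of what the Kafka trigger sends, containing only the fields the handler reads plus a few descriptive ones.

```python
import base64
import json


def lambda_handler(event, context):
    # Same logic as the function deployed in this tutorial.
    partition = list(event['records'].keys())[0]
    message = event['records'][partition]
    for data in message:
        stringvalue = base64.b64decode(data['value'])
        value = json.loads(stringvalue)
        print('pizza ' + value['pizza'])
        print('name ' + value['name'])


def make_test_event(payload, topic="test", partition=0):
    """Wrap a JSON-serializable payload the way the trigger delivers it."""
    # The trigger delivers the record value base64-encoded.
    encoded = base64.b64encode(json.dumps(payload).encode("utf-8")).decode("ascii")
    return {
        "records": {
            f"{topic}-{partition}": [
                {"topic": topic, "partition": partition, "offset": 0, "value": encoded}
            ]
        }
    }


if __name__ == "__main__":
    lambda_handler(make_test_event({"pizza": "Pepperoni", "name": "Frank"}), None)
```

Run as a script, this prints the same two lines shown in the log entry above.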

Scalable event driven architecture with AWS Lambda and Apache Kafka

AWS Lambda functions are widely adopted by developers to ingest and parse varying volumes of data without having to pre-provision a dedicated service.

The combination of Lambda functions and Apache Kafka offers an interesting option for building scalable, serverless, event driven architectures. It lets you read data from Apache Kafka without having to code an application or deploy a dedicated service for it. This reduces your overall infrastructure cost and the complexity of your system.
