Mar 17, 2021

Data and disaster recovery

We don’t like to think about disasters, but sometimes they just happen. Find out how you should prepare your data for the worst, even while hoping for the best.

Disaster recovery is a strange topic in the world of security and compliance. There is a lot of conversation about it, but often little gets done in practical terms. This may be because for most organisations, it’s perceived as too expensive or too remote. In normal circumstances, a true disaster is a High Impact - Low Frequency (HILF) event and something in the human psyche prefers to focus on Low Impact - High Frequency (LIHF) events. This is why you tend to worry more about burning dinner than getting hit by a meteorite.

When you design your data storage and manipulation systems, the most common route to take is to consider redundancy: RAID storage, clustered/load-balanced servers and so on. What receives much less consideration is resiliency, which is relevant to HILF events: fault recovery and persistent service dependability.

Choose wisely

One of Aiven’s core features is choice. We provide multiple tiers of systems, from small setups with little to no redundancy (Hobbyist Aiven for PostgreSQL) all the way to big plans affording advanced redundancy and resiliency (Premium Aiven for PostgreSQL with extra geographically dispersed read replicas, or Aiven for Apache Kafka with MirrorMaker). At Aiven, you can find just the right service mix for you. The flipside is that you have to make the right choices when selecting one: you have to balance the risk against the financial cost, and make that trade-off an informed one.

The underlying technology that creates the Aiven platform is (perhaps unsurprisingly) built in part on Aiven products. We utilize all of the advanced redundancy and resiliency features to ensure the durability and persistence of Aiven services. Replicating data and services to multiple continents across a large number of systems and disks, backed by regularly tested backup and restore procedures, is table stakes for us. And remember, these are all features we also offer to you, our customers. We keep your infra and your data as safe as your own data architecture lets us.

So far so theoretical. By now you’re probably asking “what’s that got to do with me?” and “what should I be doing?” And maybe even “what do you mean my own data architecture?”

Making a data recovery plan

This section tells you what to do to maintain business continuity in the face of a disastrous event.

The key is to design your data architecture in such a way that it is safe from both LIHF and HILF events. Remember, Aiven can keep your infra and data safe, but even though we can “see” that you have two Postgres services running in different availability zones, we can’t know what you keep in them--whether they’re separate databases or whether they’re the same database replicated safely across AZs.

Step 1: Ensure system-level redundancy

This is not something you need to worry about with Aiven: we take care of this for you. In a future post we'll cover how we set up your instances smoothly and securely across clouds but, in case you urgently need details, here are some quick tips.

RAID is a real-life array: instead of holding numbers or strings, each element is a drive containing your critical system data. As storage has become cheaper, using local NVMe drives with your compute instances is a very real option. Depending on your cloud, you can use "ephemeral" storage and create a RAID setup yourself, or you can leave all of that to be managed by the cloud provider, for example by using EBS with AWS.
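To make the array analogy concrete, here is a minimal Python sketch of the parity idea used by RAID 5-style layouts. It is a toy illustration only, not how Aiven or any cloud provider implements storage, and the function names `xor_blocks` and `rebuild_missing` are hypothetical.

```python
from functools import reduce


def xor_blocks(blocks):
    """XOR equal-length byte blocks together, byte by byte."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def rebuild_missing(surviving_blocks, parity):
    """Recover the single lost data block from the survivors plus parity."""
    return xor_blocks(surviving_blocks + [parity])


# Three "disks", each holding one equal-sized block of data (toy values).
disks = [b"AAAA", b"BBBB", b"CCCC"]
parity = xor_blocks(disks)

# Simulate losing the second disk, then rebuild it from the other two.
recovered = rebuild_missing([disks[0], disks[2]], parity)
```

Because XOR is its own inverse, any one missing block can be rebuilt from the remaining blocks and the parity block. That is the redundancy trade: one extra disk's worth of space in exchange for surviving a single disk failure.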

You can also provide your own keys to encrypt the disks of your bare metal instance, with most cloud providers supporting this through their command-line tools or API. Often, they will integrate with key management services such as AWS KMS or GCP Cloud KMS.

Of course, this all depends on the operating system you run on your instances and your needs. For some it might be enough to use networked storage and back up snapshots to object storage regularly.

Step 2: Distribute HA plans securely

With more than 10 services, we know that High Availability (HA) has one conceptual definition but many, many implementations. Distributed services, like Kafka and Cassandra, have functions for HA baked right into their design whereas more common databases are happy to run as a single instance.

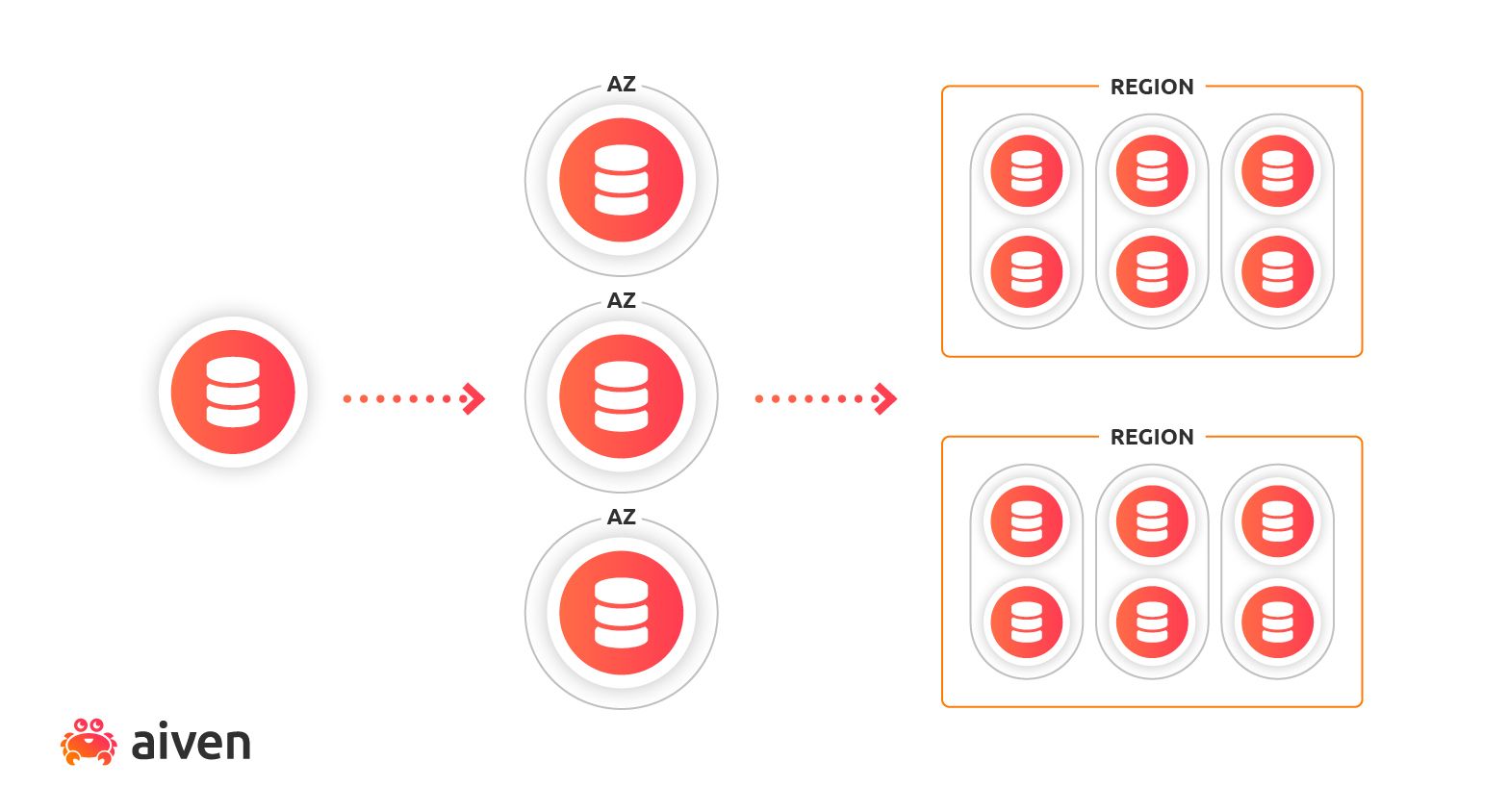

We support single-node instances because we know that HA is less important for development environments. For production environments, our HA plans deploy instances in the region you choose, ensuring that they are spread across Availability Zones (AZs). If you launch Postgres in an HA setup, you will have your primary in one AZ and your standby in another. If you launch a six-node Kafka cluster, we place each instance in a different AZ until every AZ in that region is in use, and only then deploy additional instances to AZs that already host one. You don't need to worry about this, however, as Kafka is aware of these locations and will assign partitions so that data is spread across AZs as much as possible.
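The placement rule described above can be sketched as a simple round-robin over the zones in a region. This is an illustrative model under our own assumptions, not Aiven's actual scheduler; the zone names and the `spread_across_zones` helper are hypothetical.

```python
from collections import Counter
from itertools import cycle, islice


def spread_across_zones(node_count, zones):
    """Assign nodes to zones round-robin, reusing a zone only once all are used."""
    return list(islice(cycle(zones), node_count))


# Hypothetical region with three availability zones.
zones = ["az-a", "az-b", "az-c"]

ha_postgres = spread_across_zones(2, zones)    # primary and standby
kafka_cluster = spread_across_zones(6, zones)  # six brokers
brokers_per_zone = Counter(kafka_cluster)
```

In this model the two Postgres nodes land in different zones, and the six Kafka brokers end up two per zone, so losing a single zone never takes out more than a third of the cluster.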

Backups for all of our database services are taken at different intervals and retained for different lengths of time, depending on the plan you have selected. Your backups are stored in Object Storage in the same region and can be used to restore your service to a particular point in time (point-in-time recovery, or PITR) or to create a fork: an exact clone of your current service, for which you can choose a different region and plan. This is particularly useful when you want to make a fork of your production database for your engineering teams.
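As a rough illustration of how point-in-time recovery generally works in databases such as PostgreSQL, a restore starts from the most recent base backup taken before the target time and then replays logged changes up to that moment. The sketch below models only the backup selection step; the schedule, retention and the `pick_base_backup` helper are assumptions made for the example, not Aiven plan details.

```python
from datetime import datetime, timedelta


def pick_base_backup(backup_times, target):
    """Return the most recent backup taken at or before the target time."""
    candidates = [t for t in backup_times if t <= target]
    if not candidates:
        raise ValueError("no backup old enough to reach the target time")
    return max(candidates)


# Hypothetical schedule: one base backup per day at 02:00, three days retained.
first_backup = datetime(2021, 3, 14, 2, 0)
backups = [first_backup + timedelta(days=n) for n in range(3)]

# We want the database as it was on 15 March at 17:30.
target = datetime(2021, 3, 15, 17, 30)
base = pick_base_backup(backups, target)

# Changes made in this window are replayed on top of the base backup.
replay_window = target - base
```

The practical takeaway is that the retention period bounds how far back you can go: a target earlier than the oldest retained backup cannot be restored, which is why the helper raises an error in that case.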

Step 3: Set up read replicas and mirrored copies

Some of you may be thinking, "this is great but not so useful when I am working multi-cloud". A good point indeed. We have HA plans that are AZ aware, but multi-cloud is a different story.

In Postgres or MySQL, add a read replica to your service and deploy that into the cloud (and region) of your choice.

For Kafka, use the magic of MirrorMaker 2 (and 1), a tool that is bundled with open source Apache Kafka and allows you to replicate data from one cluster to another. Whether you want to run a one-off migration or you have geographically distributed clusters that need to be kept in constant sync (either with each other or with a large, centralised cluster), MirrorMaker is a good fit.
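For a feel of what a one-way MirrorMaker 2 flow involves, the sketch below renders a minimal configuration in the properties format used by upstream Apache Kafka. The cluster aliases, hostnames and helper functions are hypothetical, and a managed service may expose these settings through its own console or API rather than a properties file.

```python
def mirrormaker2_properties(source, target, topics=".*"):
    """Render a minimal one-way MirrorMaker 2 flow as properties text.

    `source` and `target` are (alias, bootstrap_servers) tuples.
    """
    (src_alias, src_servers), (dst_alias, dst_servers) = source, target
    lines = [
        f"clusters = {src_alias}, {dst_alias}",
        f"{src_alias}.bootstrap.servers = {src_servers}",
        f"{dst_alias}.bootstrap.servers = {dst_servers}",
        f"{src_alias}->{dst_alias}.enabled = true",
        f"{src_alias}->{dst_alias}.topics = {topics}",
    ]
    return "\n".join(lines)


def mirrored_topic_name(source_alias, topic):
    """With the default policy, mirrored topics are prefixed with the source alias."""
    return f"{source_alias}.{topic}"


# Hypothetical cluster aliases and addresses, for illustration only.
config = mirrormaker2_properties(
    ("primary", "kafka-primary.example.com:9092"),
    ("backup", "kafka-backup.example.com:9092"),
    topics="orders.*",
)
```

Note the naming detail: with MirrorMaker 2's default replication policy, a topic called `orders` on the `primary` cluster shows up as `primary.orders` on the target, so consumers that fail over to the mirrored cluster need to subscribe to the prefixed name.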

And for services that do not have such simple options, Kafka can come to the rescue! Through Kafka Connect, Aiven offers a wealth of open source connectors that can read from and/or push to a number of systems. This can be useful if you would like to move parts of your database into OpenSearch for analytics, or to move data between InfluxDB instances using the HTTP API.

Further reading

For more information on how the Shared Responsibility Model of Cloud Computing needs to be considered in your organization’s disaster recovery planning, please see the Cloud Security Alliance Security Guidance for Critical Areas of Cloud Computing v4.0 in Domain 6 - Management Plane and Business Continuity section 6.0.1 Business Continuity and Disaster Recovery in the Cloud.

You can also have a look at what we think about Data security compliance in the cloud.

Next steps

Your next step could be to check out Aiven for PostgreSQL, Aiven for MySQL or Aiven for Apache Kafka.

If you're not using Aiven services yet, go ahead and sign up now for your free trial at https://console.aiven.io/signup!

In the meantime, make sure you follow our changelog and blog RSS feeds or our LinkedIn and Twitter accounts to stay up-to-date with product and feature-related news.
